Update Dec 1st: Due to an error in the average performance graphs, some results were slightly off. They have now been corrected. This does not change the conclusion of the review, and the overall picture of the card is unaffected. All in-game results are also unaffected.

It is now time! Intel ARC A770 and A750 are ready to be sold to gamers and are widely available to the public. The two new products from team blue face a really rough landing against Nvidia and AMD, which both have over a decade of experience making graphics cards for gaming. Today we will be comparing the ARC A770 Limited Edition to several other GPUs from both AMD and Nvidia.

As a reminder, we do not (yet) receive review samples for these products, so every card you will see here is one I personally own or that we paid for out of our own pockets. This means we have to wait for the cards to be available on the market to buy them and have them delivered (it doesn’t help that my order was apparently stuck for over two weeks due to a supply issue) before we can review them. On the bright side, this allows this review to be truly impartial.

The ARC A770 GPU

If you missed the A770 unboxing we did a few days ago, you can catch up here. This is the Intel “reference” model, which is designed entirely by Intel themselves. Here are the major specs of the card :

| Graphics card | Arc A770 Limited Edition |
| --- | --- |
| Codename | Alchemist |
| Lithography | TSMC N6 |
| Graphics clock | 2100 MHz |
| Graphics memory | 16 GB GDDR6 |
| Memory interface | 256 bit |
| Memory bandwidth | 560 GB/s |
| Memory speed | 17.5 Gbps |
| PCI Express | 4.0 |
| TBP | 225 W |
| MSRP | $349 USD |

More specifications are available on Intel’s product page.

Although the specs on paper are not bad, there seem to be a few disappointing points. The first obvious one is that the price in Europe is sadly very steep. I had to pay 460 euros for this model with VAT included, which puts it in a very bad position considering I can find AMD’s RX 6700 for cheaper and some RTX 3060 Ti cards for not much more. This already makes it a hard pick here against the competition.

The 225 W TBP is also a bit high, but considering this is the first generation, I am not surprised. There is room for improvement, and 225 W is still very acceptable for most people.

The 16 GB of VRAM at this price point is a good bonus, however. Most of the competing options use 8 GB of GDDR6, except for the 3060 which uses 12 GB, still less than Intel. Intel also uses a wider bus at 256 bit, whereas Nvidia and AMD seem to use mostly 128 bit for their cards at this price point.
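As a quick sanity check on the spec table, the memory bandwidth figure follows from the memory speed and the bus width. Here is a minimal sketch of that arithmetic; the function name is only illustrative, and the 128-bit line is a hypothetical card at the same memory speed, not a specific competitor.

```python
def memory_bandwidth_gb_s(speed_gbps: float, bus_width_bits: int) -> float:
    """Peak theoretical bandwidth in GB/s.

    speed_gbps is the per-pin data rate in Gbit/s; dividing by 8
    converts bits to bytes.
    """
    return speed_gbps * bus_width_bits / 8


# Values from the spec table above.
a770 = memory_bandwidth_gb_s(17.5, 256)
print(f"A770, 256 bit: {a770:.0f} GB/s")  # 560 GB/s

# Hypothetical 128-bit card at the same memory speed, for comparison only.
narrow = memory_bandwidth_gb_s(17.5, 128)
print(f"128 bit at the same speed: {narrow:.0f} GB/s")  # 280 GB/s
```

At equal memory speed, halving the bus width halves the peak bandwidth, which is why the wider bus counts as a point in Intel's favor on paper.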

Another downside for Intel is the lack of support for older versions of DirectX. Only DX12 Ultimate is listed as supported, and that will show later in the benchmarks. Thankfully, Vulkan, OpenGL and OpenCL are supported as well.

One last point worth mentioning: this card does have H.264, H.265 and AV1 encoding and decoding, which is nice to see.

The test system

Here is the full system used for benchmarking all graphics cards:

| GPU tested | AMD GPU | Intel GPU | Nvidia GPU |
| --- | --- | --- | --- |
| CPU | Intel Core i7 12700K | Intel Core i7 12700K | Intel Core i7 12700K |
| Motherboard | MSI Z690 Tomahawk Wifi DDR4 | MSI Z690 Tomahawk Wifi DDR4 | MSI Z690 Tomahawk Wifi DDR4 |
| BIOS version | 1.80 | 1.80 | 1.80 |
| Cooling | Arctic Liquid Freezer 2 360mm | Arctic Liquid Freezer 2 360mm | Arctic Liquid Freezer 2 360mm |
| RAM | 2×8 GB Crucial Ballistix 3600cl16 | 2×8 GB Crucial Ballistix 3600cl16 | 2×8 GB Crucial Ballistix 3600cl16 |
| GPU driver version | 22.10.3 | 31.0.101.3802 | 526.86 |
| Storage | Samsung 970 Evo Plus | Samsung 970 Evo Plus | Samsung 970 Evo Plus |
| Case | Be quiet! Silent Base 802 | Be quiet! Silent Base 802 | Be quiet! Silent Base 802 |
| Power supply | MSI A650GF | MSI A650GF | MSI A650GF |

All benchmarks are done on a Windows 11 22H2 installation with all the included updates. DDU is used when switching GPU vendors to make sure the drivers do not conflict with each other.

Here are the different graphics cards used in this review :

Nvidia RTX 3070 Founders edition

Sapphire RX 5700XT Pulse

PowerColor RX 5600XT ITX

MSI RX 460 ITX (Note : this card has been deshrouded and cooled with a Noctua NF-A9x14 plugged into the motherboard as the original fan died).

All cards have been benchmarked at both 1920×1080 and 2560×1440 resolutions. Each value is an average of at least 3 runs, sometimes more depending on the game. Vsync is always disabled.
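For readers who want to reproduce this kind of averaging, here is a minimal sketch of how per-run results could be combined. It assumes frame times in milliseconds as input and uses one common definition of 1% low (average fps over the slowest 1% of frames); capture tools differ on that definition, and this is not necessarily how the charts in this review were produced. The sample data is made up.

```python
from statistics import mean


def average_fps(frame_times_ms):
    """Average fps over a run: number of frames divided by total time."""
    return 1000 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Average fps over the slowest 1% of frames (one common definition)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    return 1000 * count / sum(slowest_first[:count])


def summarize(runs):
    """Average both metrics across several runs of the same benchmark."""
    return (
        mean(average_fps(run) for run in runs),
        mean(one_percent_low_fps(run) for run in runs),
    )


# Made-up frame times for three short runs, for illustration only.
runs = [
    [10.0] * 99 + [20.0],
    [10.0] * 99 + [25.0],
    [10.0] * 99 + [20.0],
]
avg, low = summarize(runs)
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

Averaging over several runs smooths out one-off stutters, which matter a lot for the 1% low figure since it depends on very few frames.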

All hardware is running at stock settings except for the RAM running at XMP at gear 1 and the CPU running without power limits. Two separate EPS cables have been used for CPU power and two separate PCIe cables have been used for GPUs that require more than one.

The Intel A770 has a 190 W power limit by default, which was not changed for this review.

Gaming benchmarks

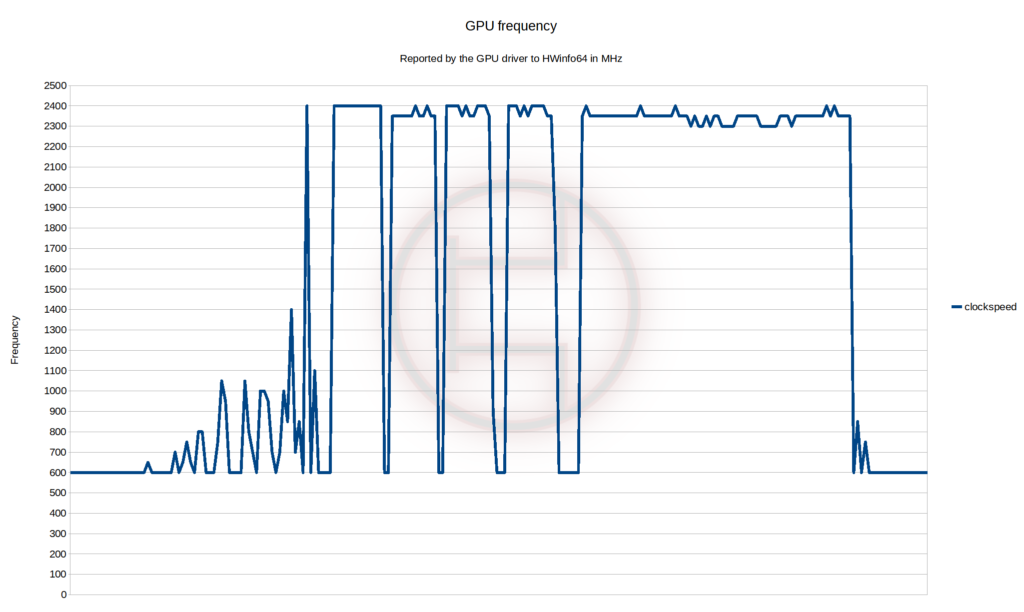

A quick note before getting into the gaming benchmarks. After seeing the card boost to 2400 MHz, I decided to monitor it, and this is the result when running a Cyberpunk 2077 benchmark. The “2100 MHz” boost advertised by Intel seems to be conservative, as the card was basically boosting to 2400 MHz for the entirety of the review. I’m not complaining about this, I am just noting that this looks like the normal, default behavior out of the box.

1920×1080 benchmarks

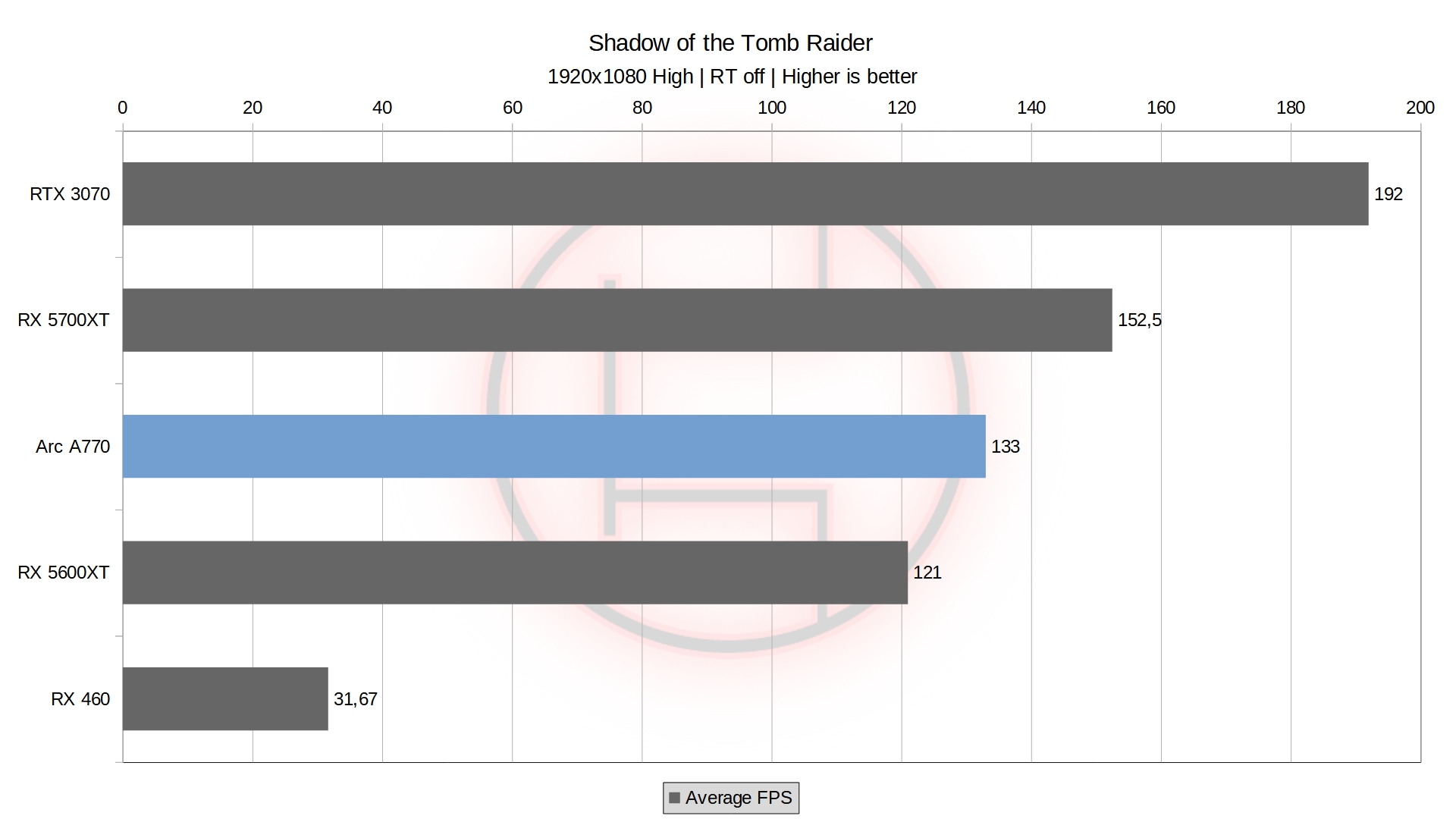

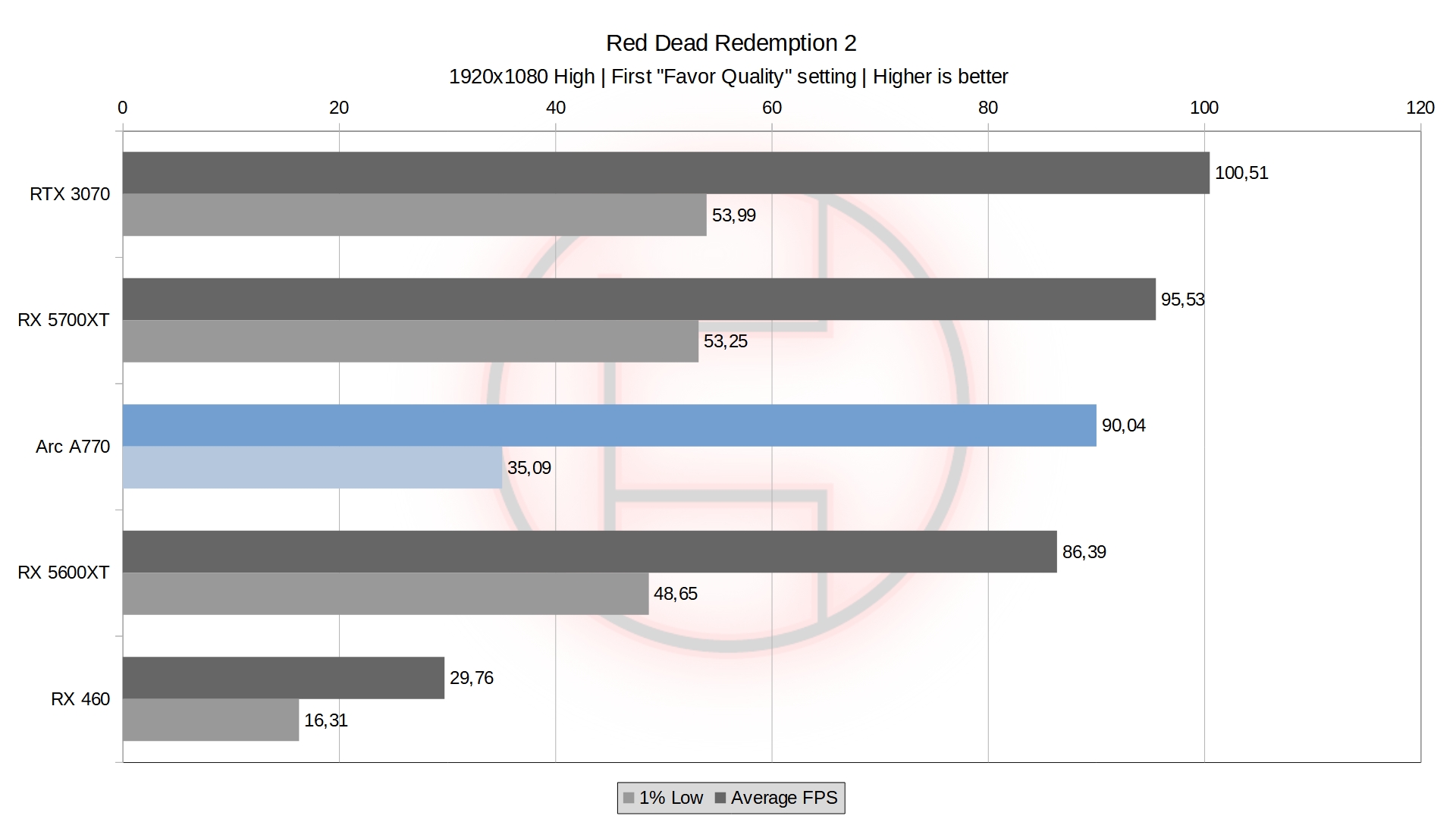

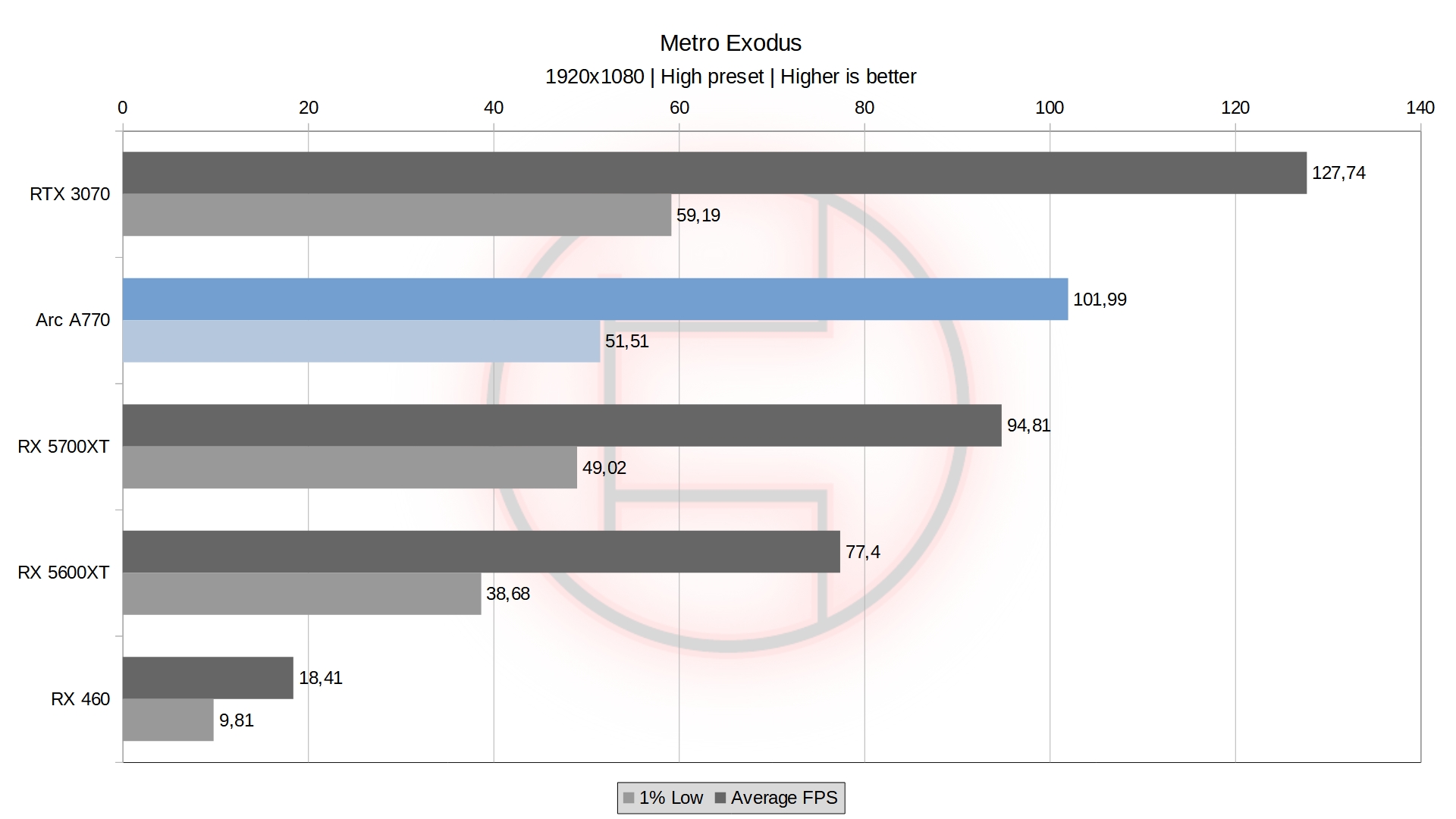

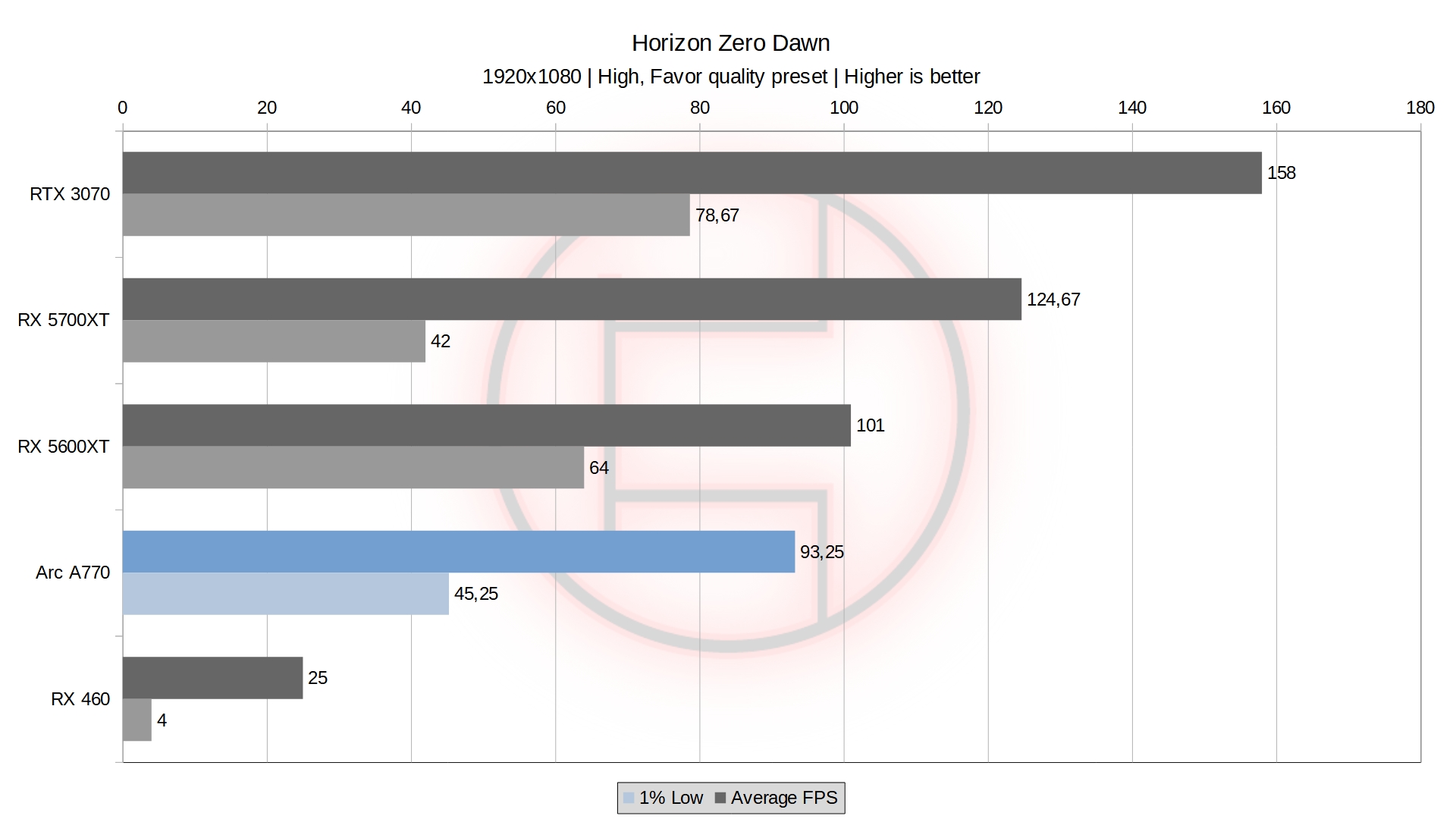

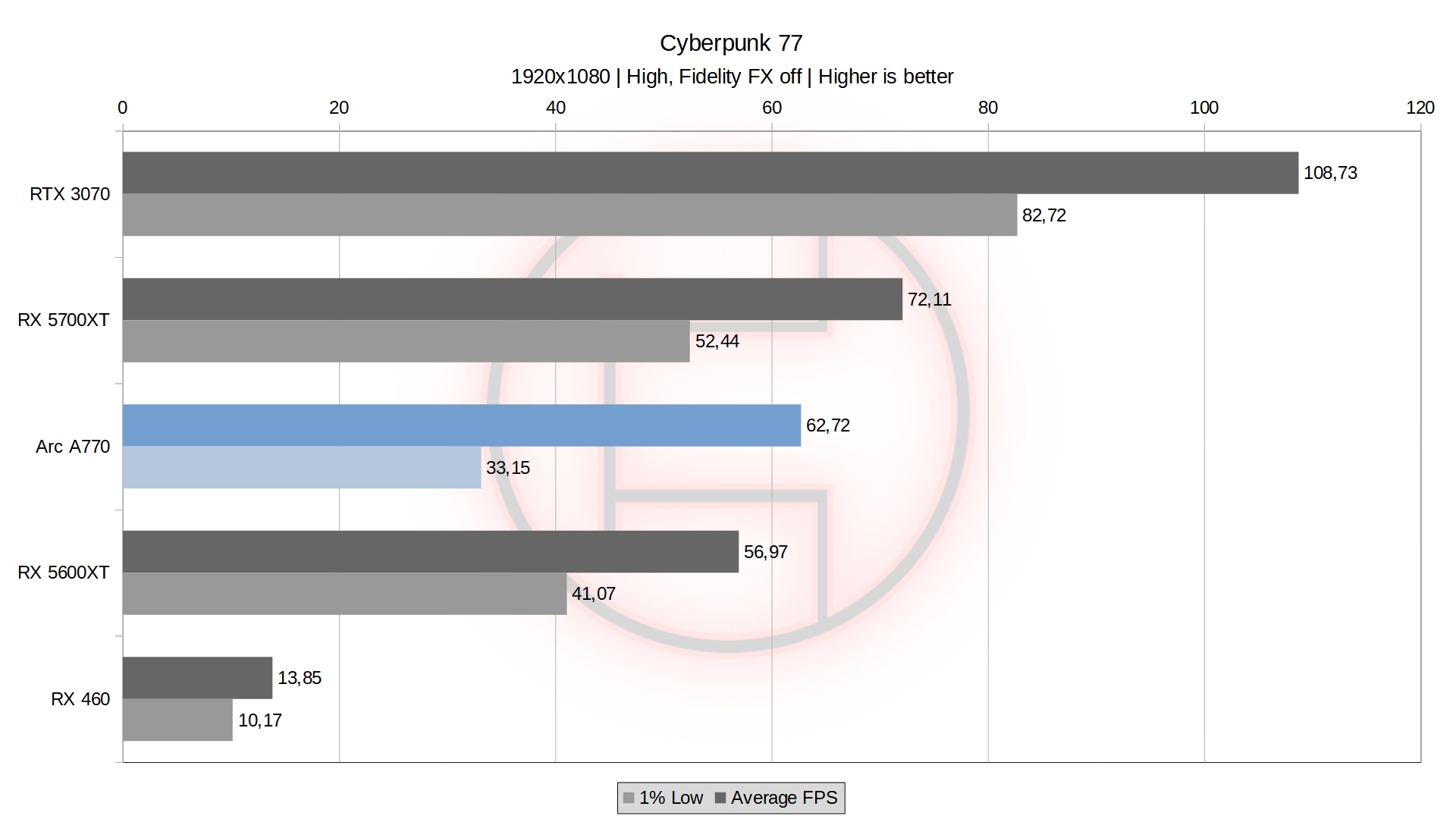

The results here are inconsistent to say the least. Depending on the game, the card goes from beating both my 5700XT and my 5600XT to scoring lower than both.

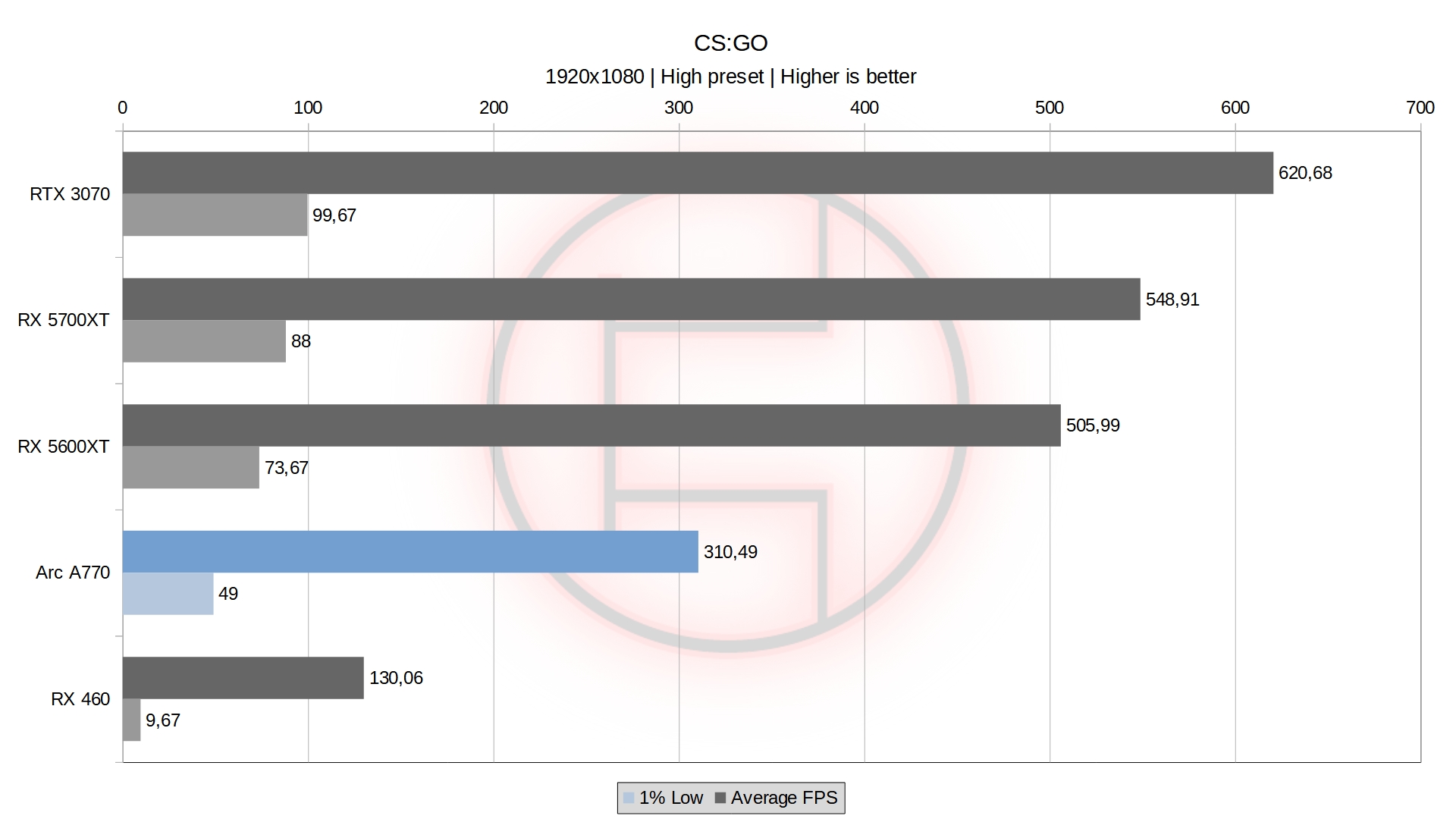

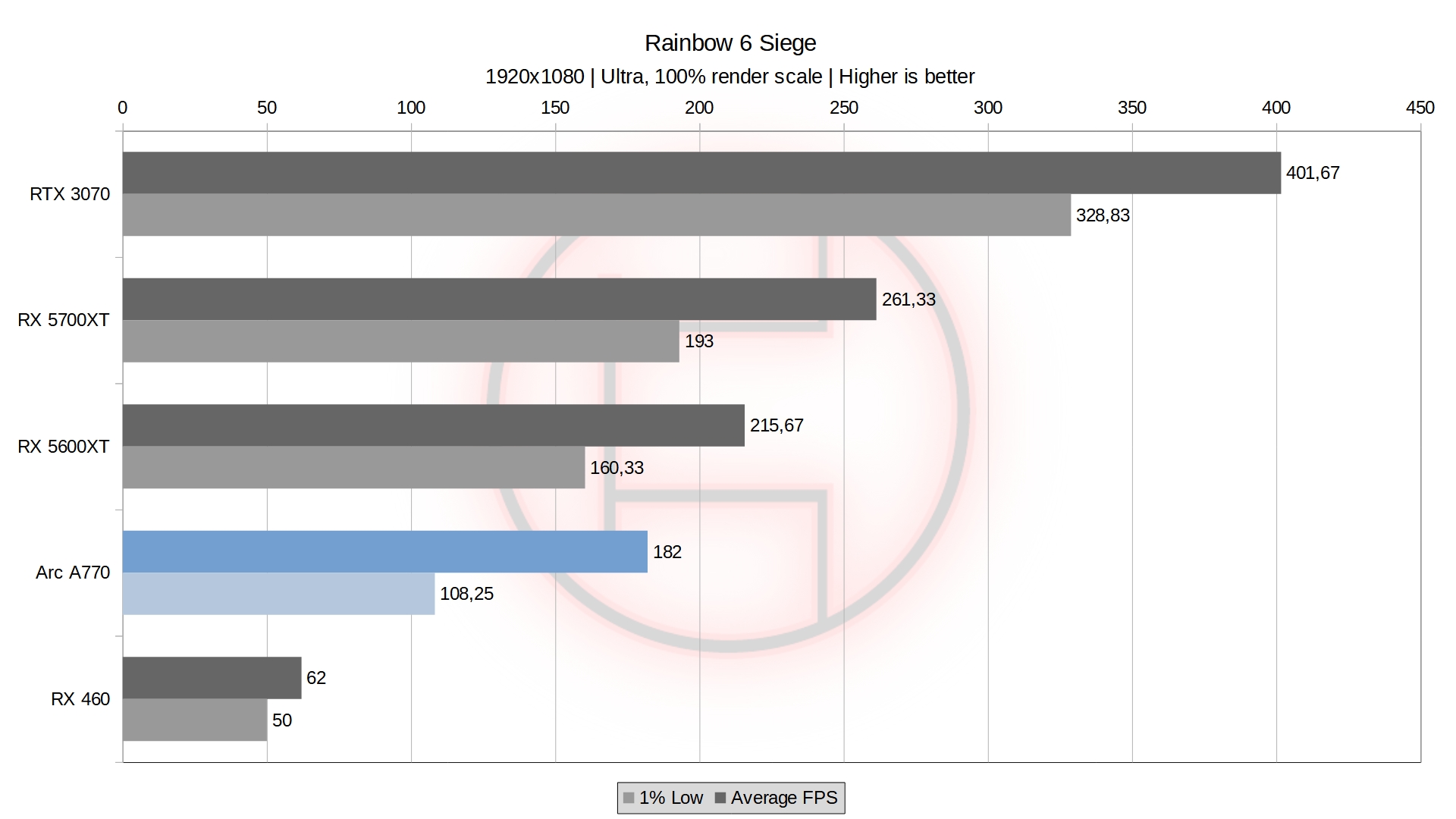

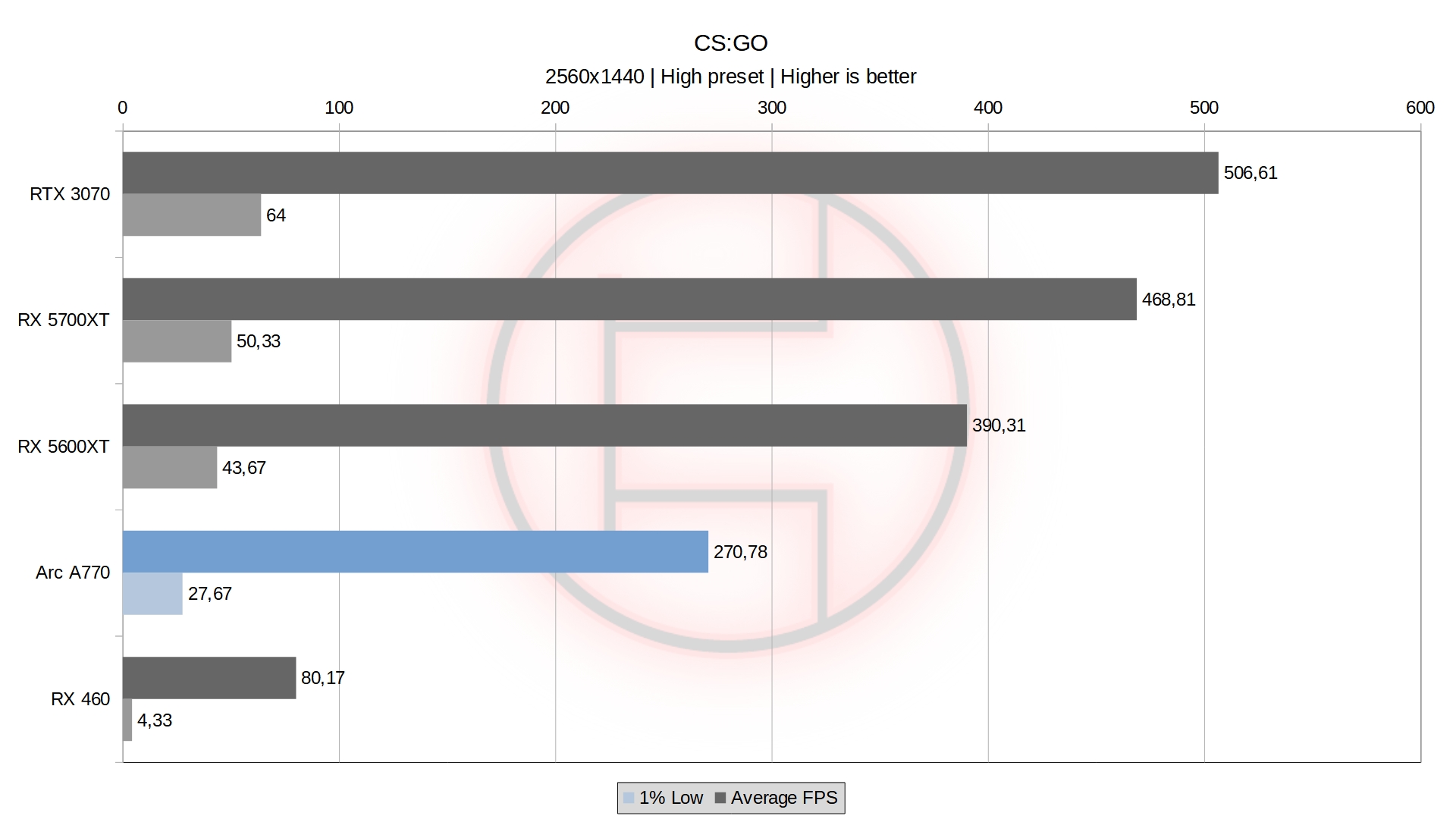

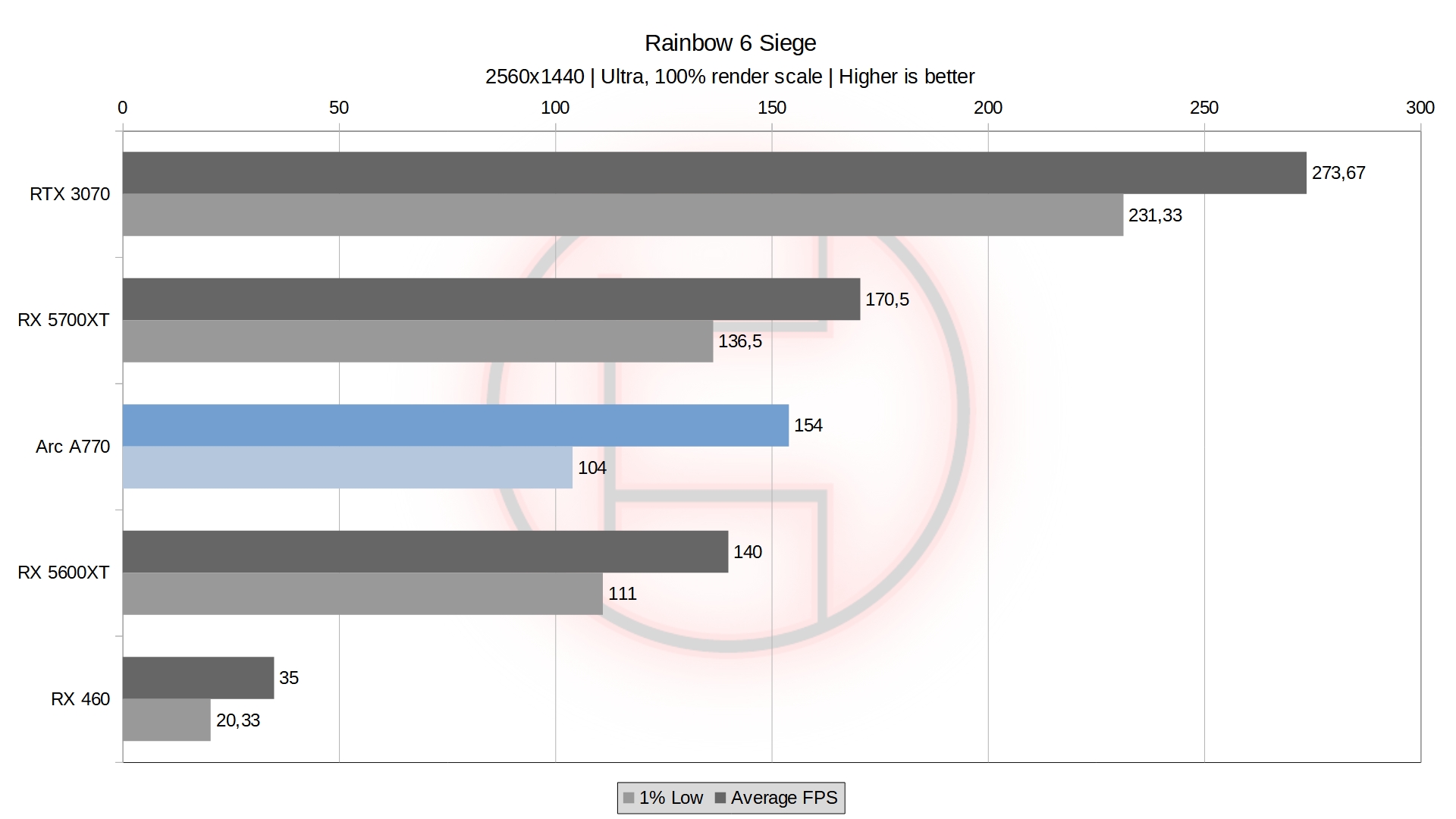

The drop is notable in CS:GO and Rainbow Six Siege because these games run on an older API, where the A770 does worse. Even if it still manages very playable fps, this is one of the areas where Intel has lots of room to improve.

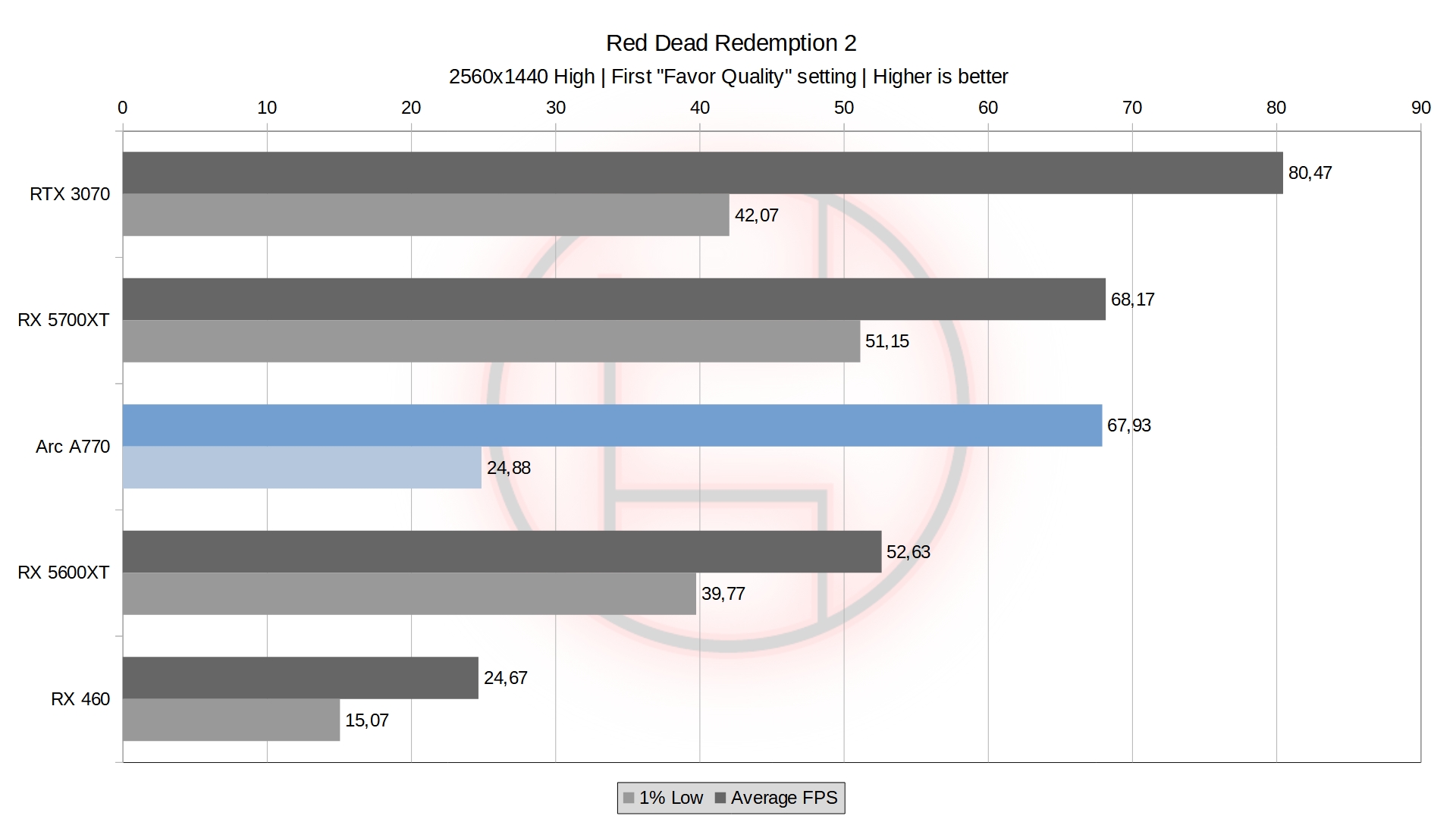

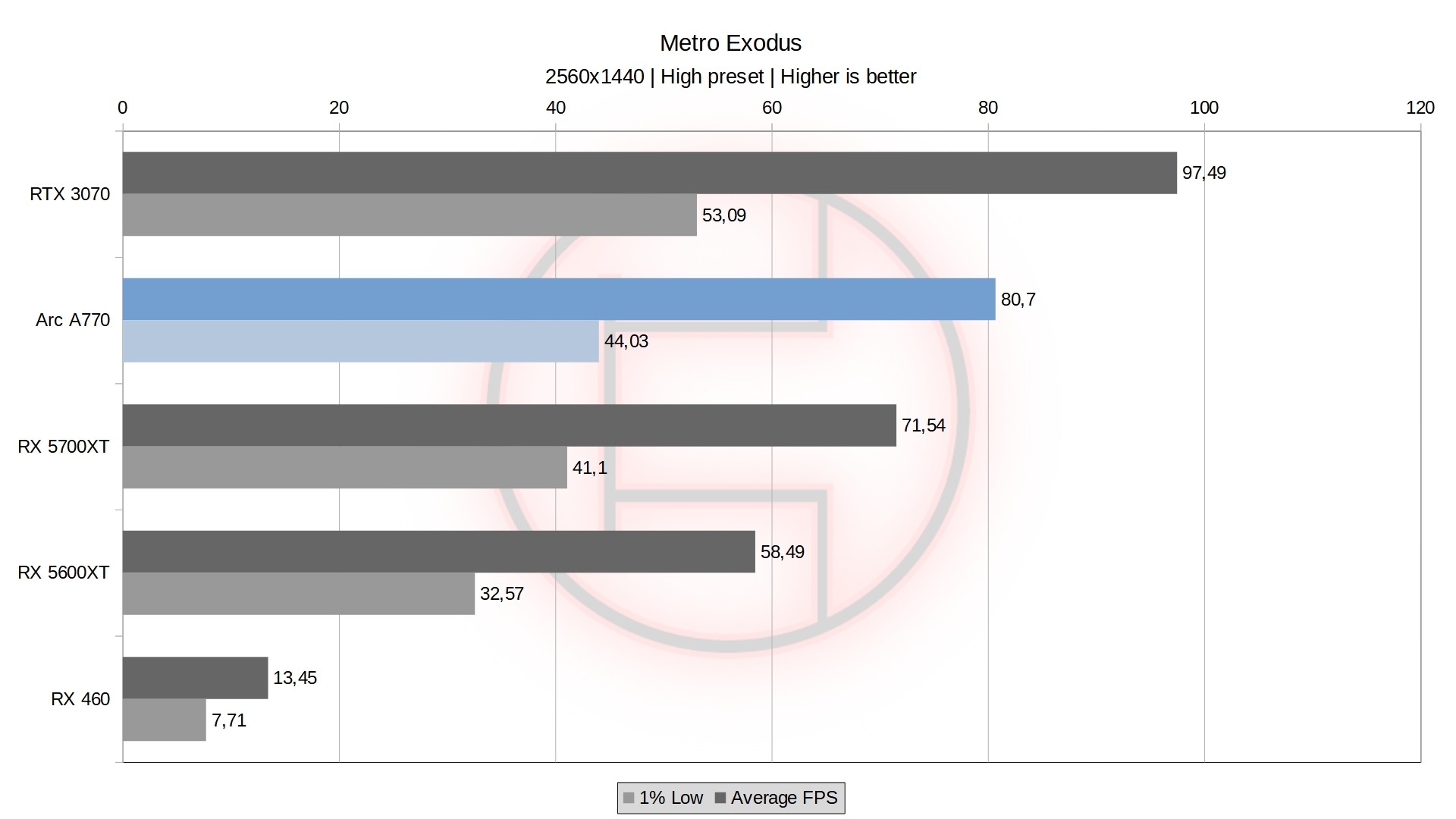

It surprised me in Metro Exodus, where only the 3070 beats it. Even if it is close to the two RDNA cards, a win is a win. On the other hand, it loses in Horizon Zero Dawn, landing just behind the 5600XT. The 1% low fps are also not very consistent, with the A770 posting worse results in Red Dead Redemption 2 and Cyberpunk 2077 than the 5600XT despite beating it in average fps.

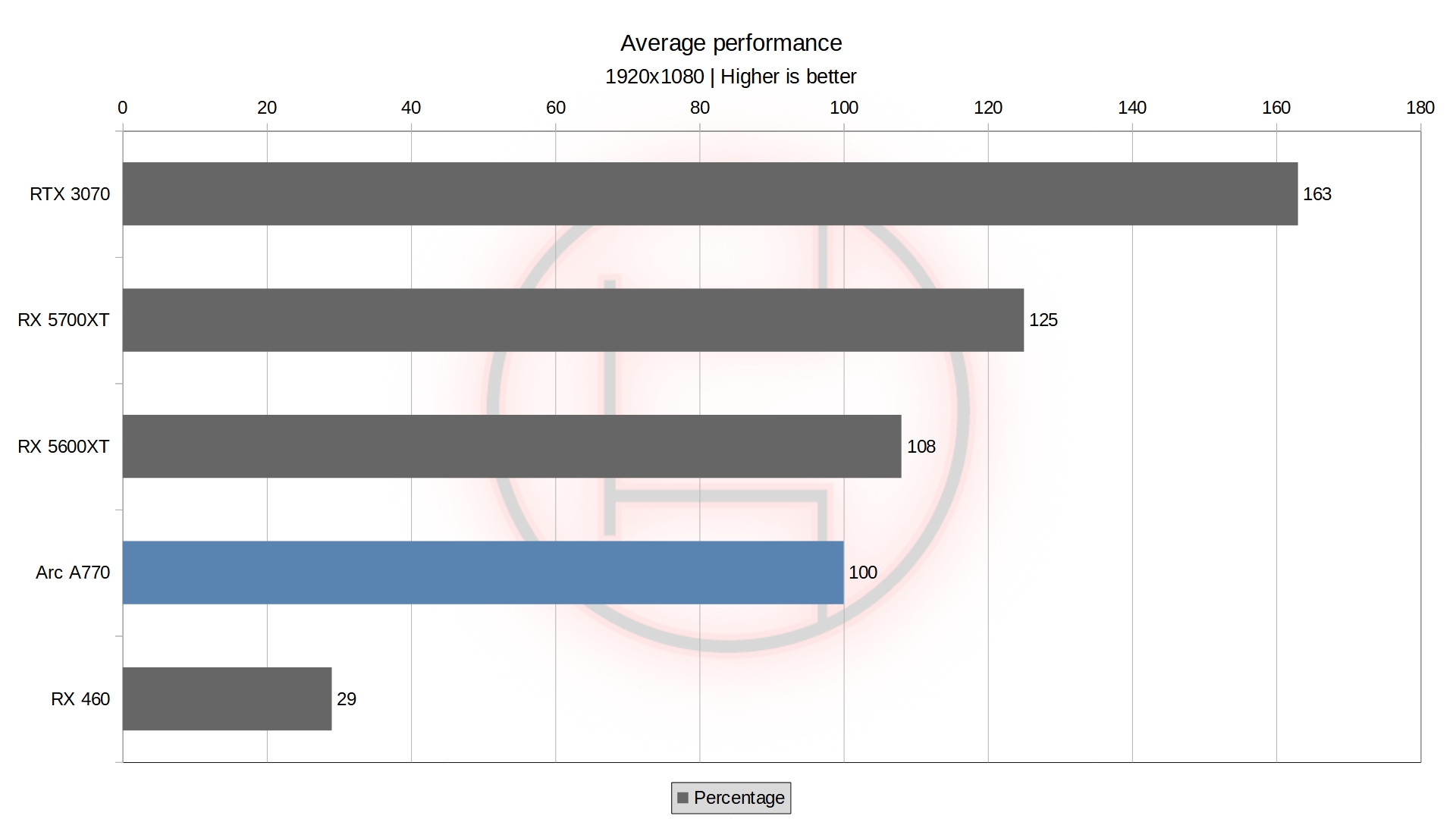

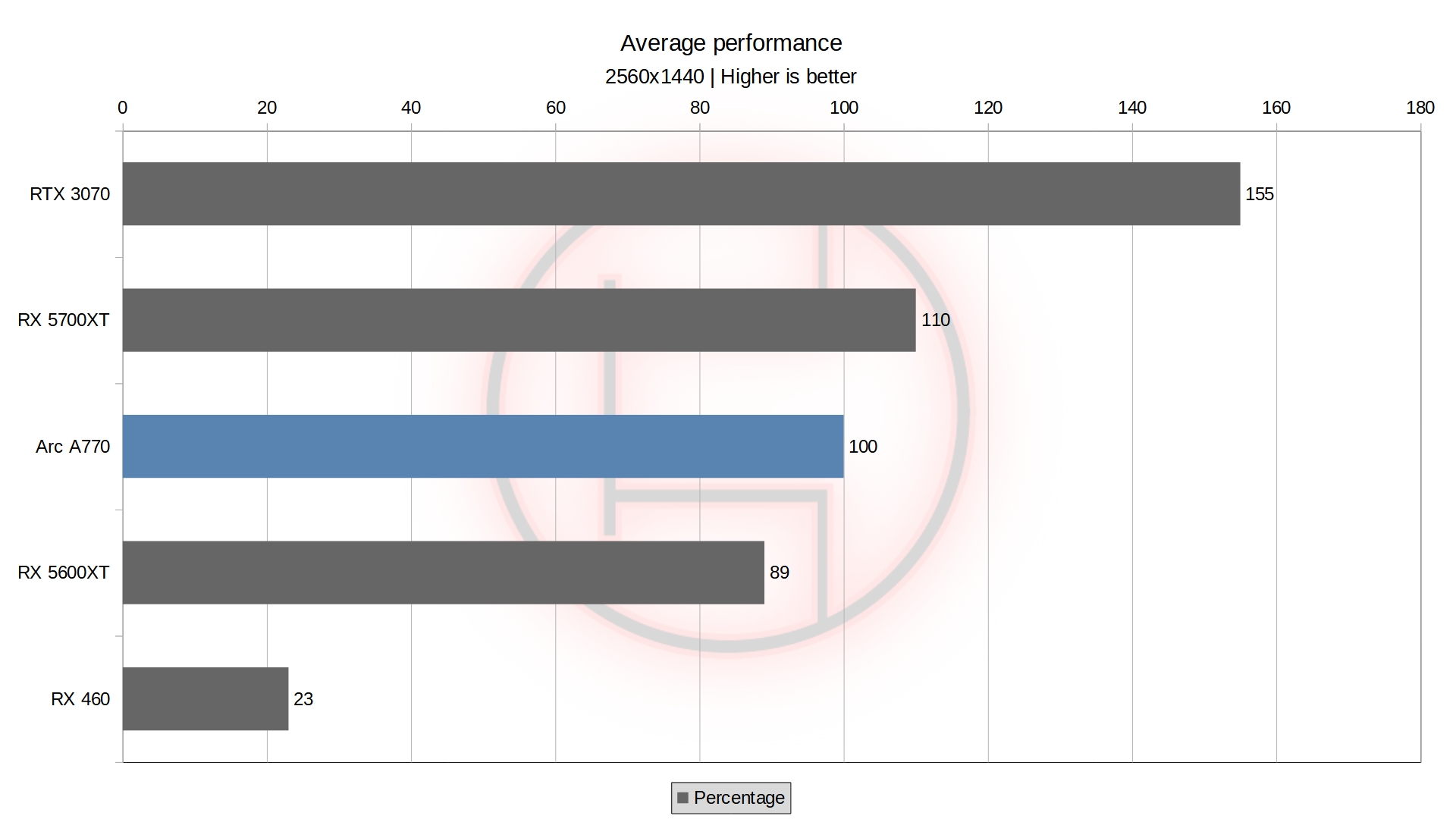

The A770 ends up 8% behind the 5600XT at 1920×1080, which is quite a bad result considering an RX 6600 offers similar performance to the 5600XT for under 350 euros.

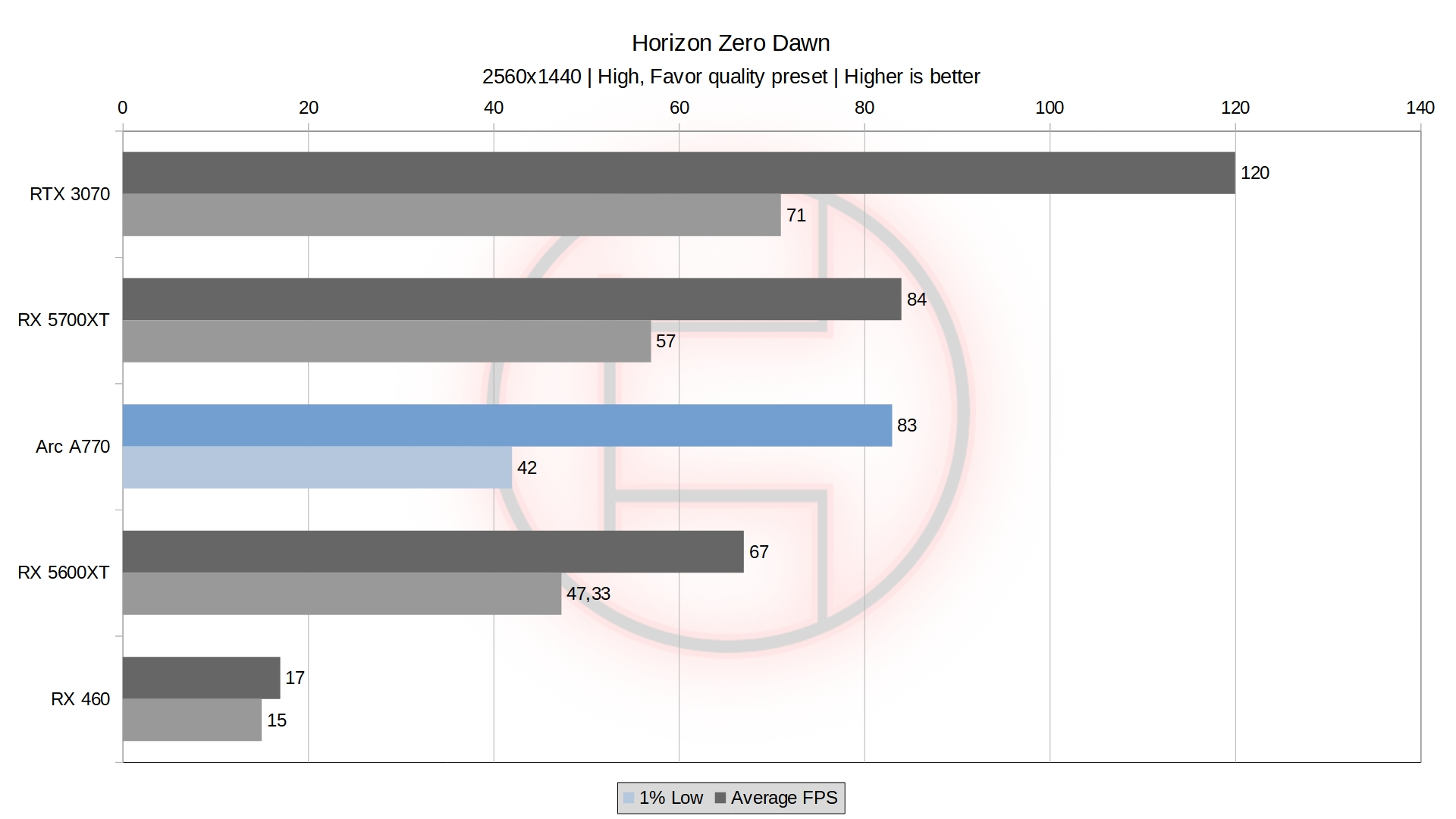

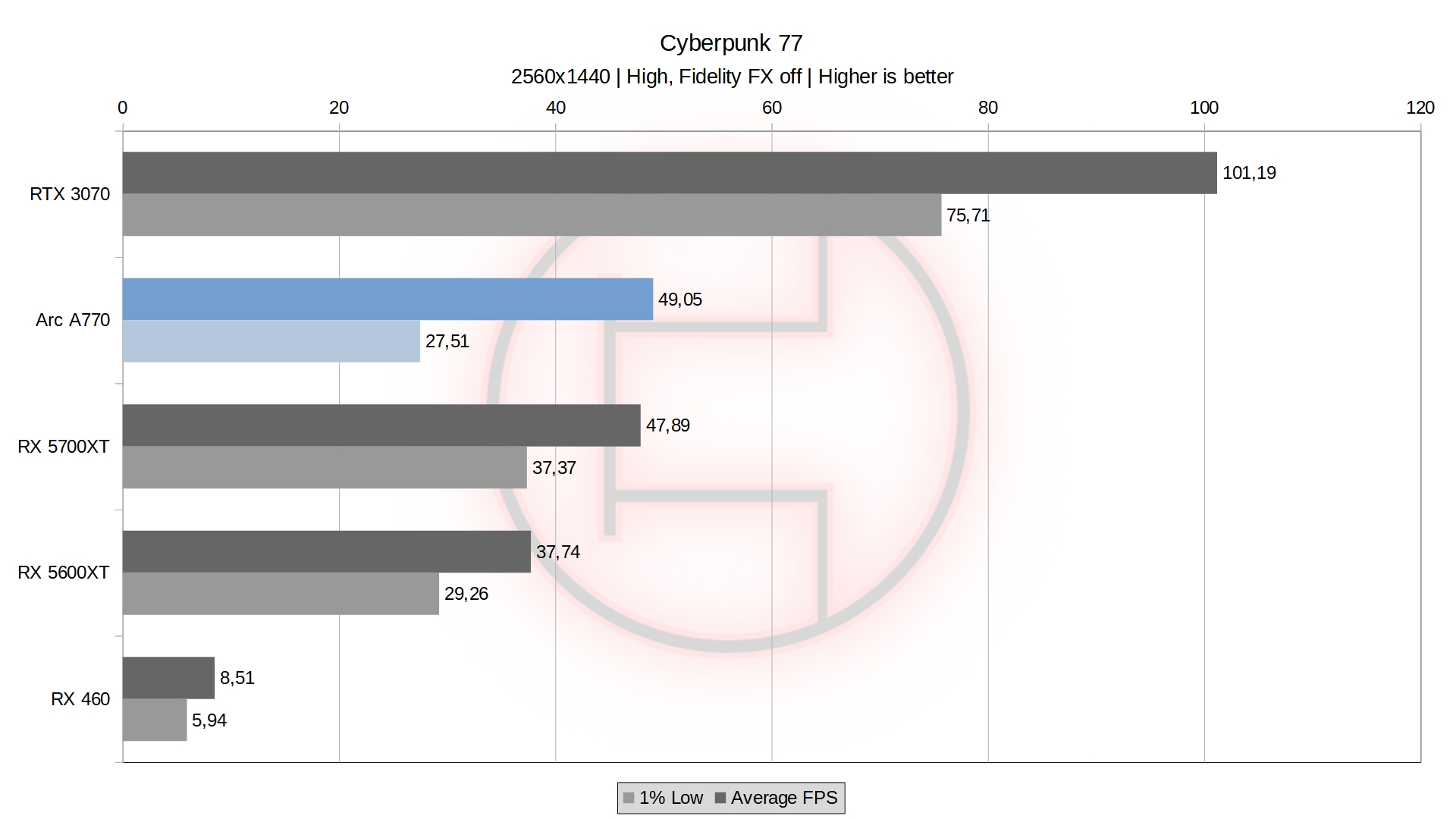

2560×1440 benchmarks

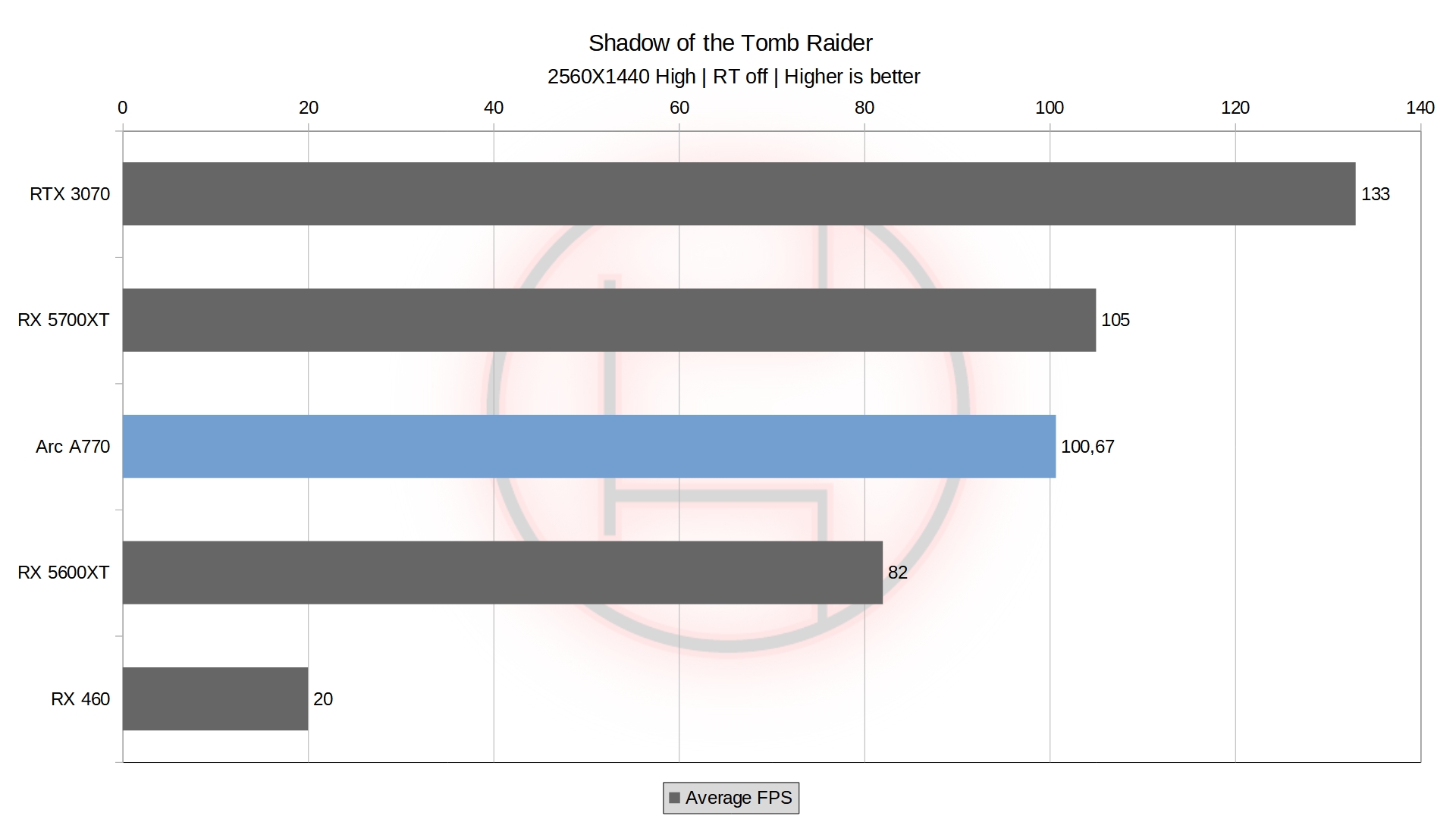

At 2560×1440, the results are slightly better for Arc. The A770 now only loses to the 5600XT in CS:GO, which is still expected, even if a 270 fps average still qualifies as a nice playing experience.

The Intel card still stays mostly in the middle of the stack as expected. However, it closes the gap with the 5700XT in games where it was previously behind, and even widens its lead in games where it was already ahead, like Metro. It even manages to beat the 5700XT in Cyberpunk 2077, which was quite a surprise.

Overall the gap shrinks significantly. The A770 is now 10% behind the 5700XT and 55% behind the 3070. It was previously 25% and 64% behind the two cards respectively at 1920×1080. Clearly this card seems to do much better at this resolution. But even then, considering the 6600XT is better at a cheaper price in Europe, it is still hard to recommend. In the US however, it is a bit better value at $350, even if the 6600XT often gets discounted.
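A note on reading percentages like these: “X% behind” and “Y% faster” are not the same number for the same pair of results, because they use different baselines. The sketch below shows both conventions with made-up fps values; it does not claim to be the formula used for the averages in this review.

```python
def percent_behind(card_fps: float, reference_fps: float) -> float:
    """How far the card trails the reference, as a share of the reference's fps."""
    return 100 * (1 - card_fps / reference_fps)


def percent_faster(reference_fps: float, card_fps: float) -> float:
    """How much faster the reference is, as a share of the card's fps."""
    return 100 * (reference_fps / card_fps - 1)


# Made-up numbers for illustration only, not results from this review.
card, reference = 80.0, 100.0
print(f"card is {percent_behind(card, reference):.0f}% behind")        # 20% behind
print(f"reference is {percent_faster(reference, card):.0f}% faster")   # 25% faster
```

The bigger the gap, the more the two conventions diverge, so it helps to check which baseline a chart uses before comparing figures across reviews.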

Other benchmarks

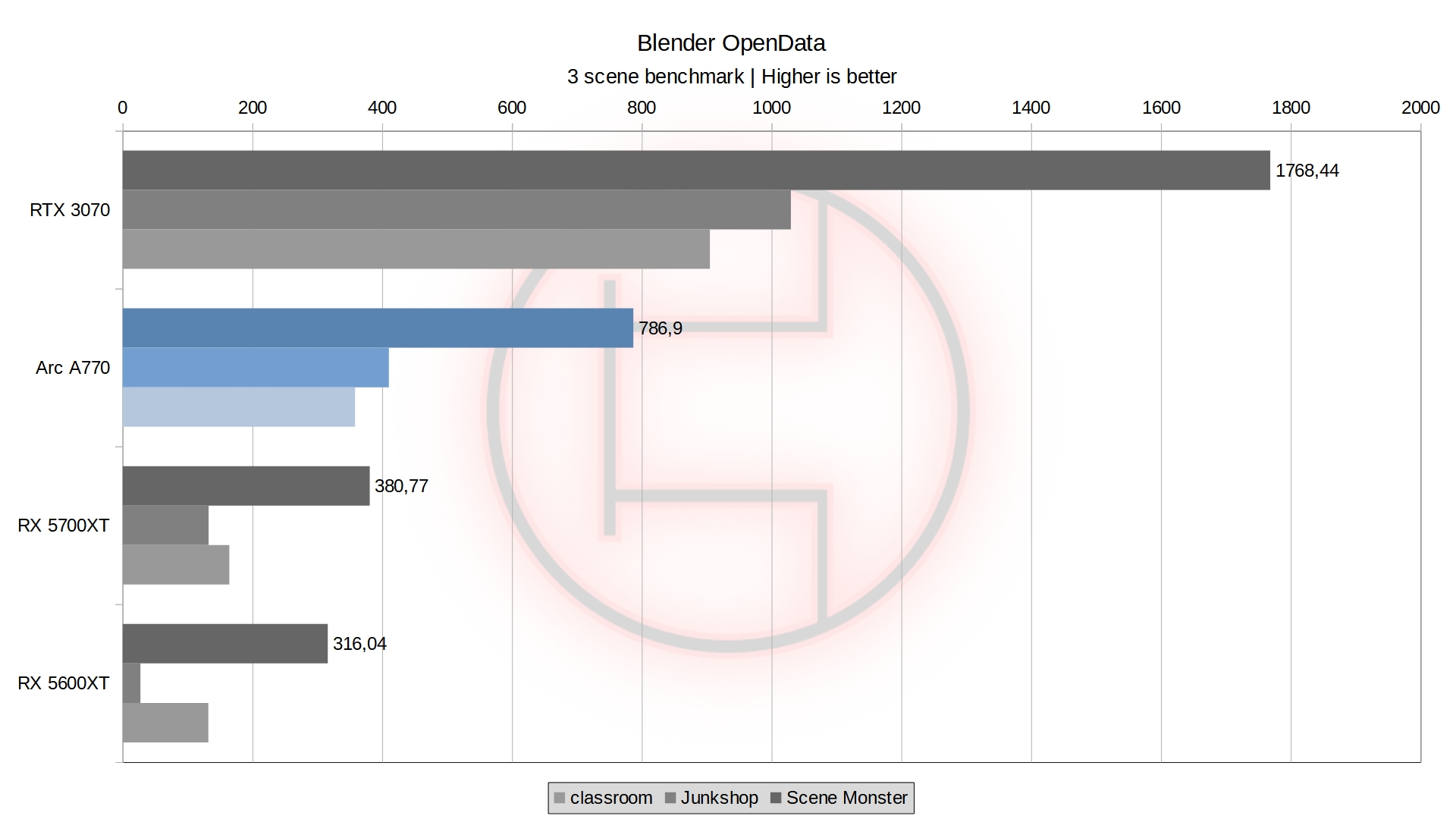

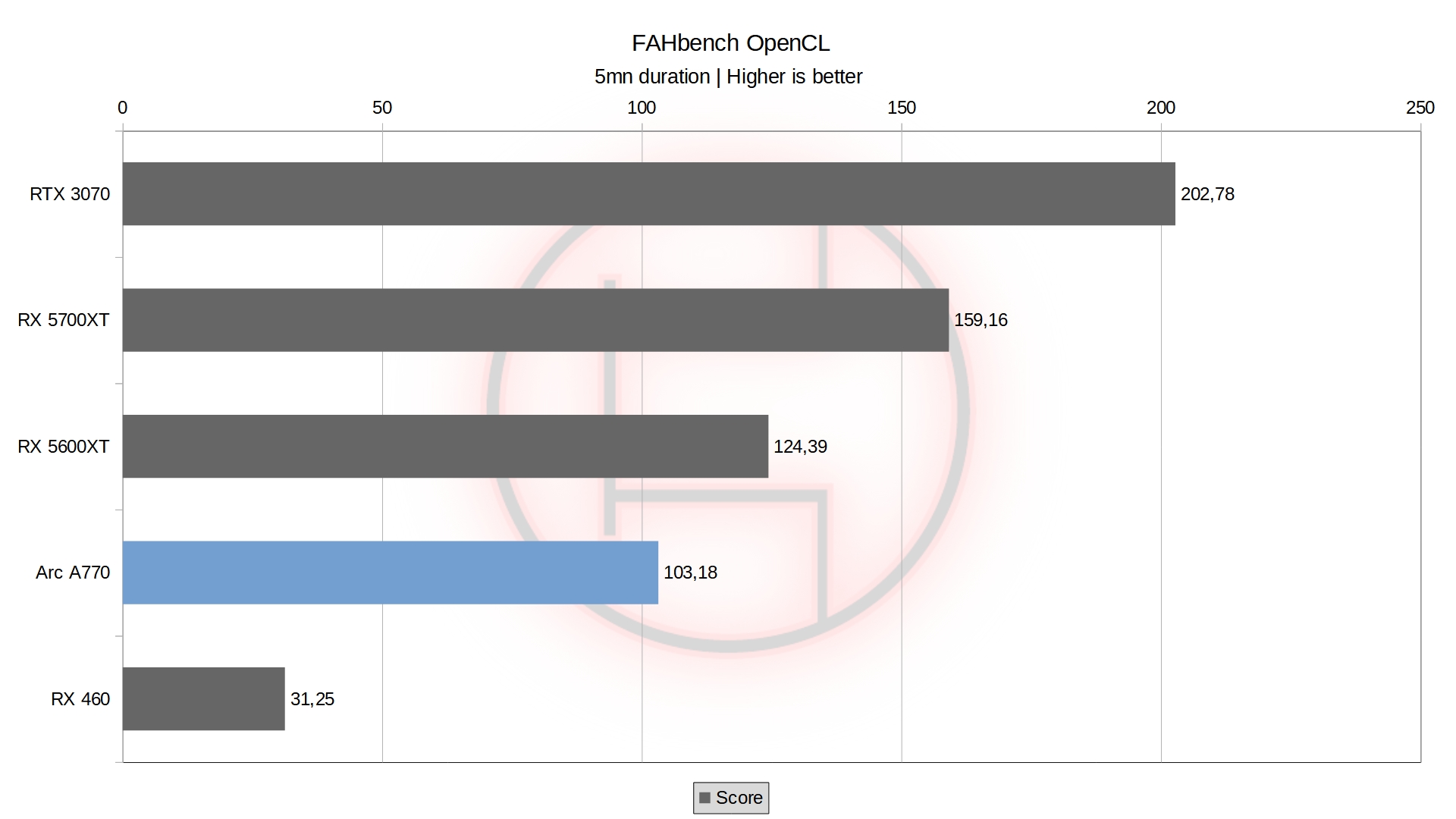

I also quickly ran Blender OpenData and FAHBench, as I was curious about the OpenCL performance. Here are the results:

While the performance in Blender is not terrible, it is massively outclassed by the RTX 3070, which makes Nvidia the clear winner for such applications here, especially since I got my 3070 FE at 550 euros, not even 100 euros more than the ARC A770, for more than twice the performance. As for OpenCL performance, it sits low in the stack, behind the 5600XT.

As a side note, I could not get Blender to run on my RX 460; it simply was not appearing in the device list.

Power readings

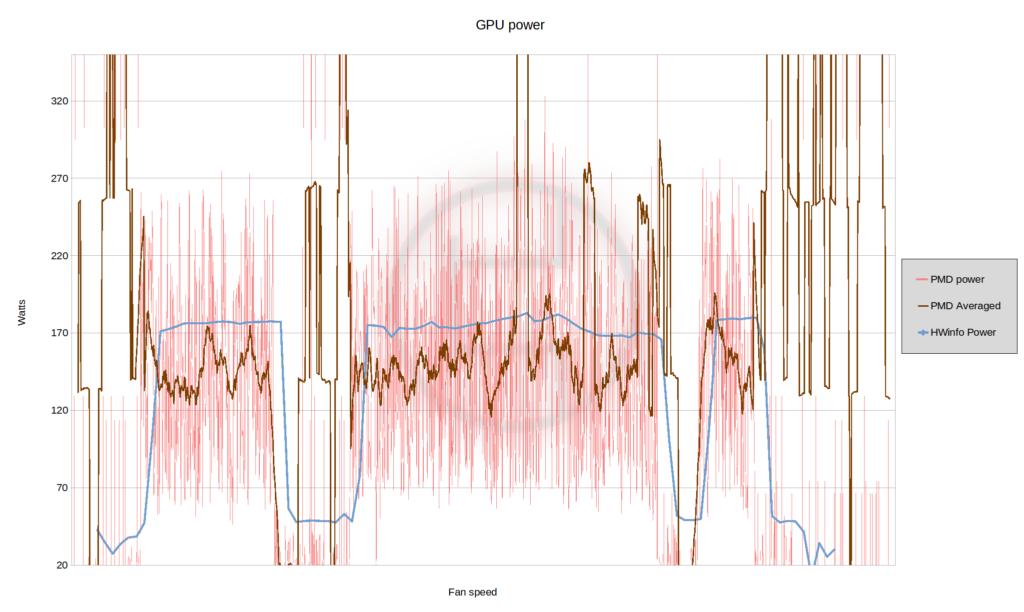

As said before, the GPU has a 190 W power limit by default, which resembles the way power limits (PL) work with Intel CPUs and their BIOSes. As of right now, the only value reported by HWiNFO is “Total GPU Power”, and it matches exactly the value reported by Intel’s driver. This confuses me, as I cannot find out whether this is power for the GPU core only or the total board power.

Regardless, the only measurements I could take are the values reported by HWiNFO and measurements at the cables with the ElmorLabs PMD. The issue with the latter is that it does not measure power through the PCIe slot.

Before you gouge your eyes out over this horrible graph, a few details. First, the PMD measures voltage and current to get the power. Sometimes there can be a drop in voltage which makes the PMD panic and report… 6000 W. Yes, I actually have a value above that which isn’t shown because it wouldn’t make sense. Second, the PMD measures power at the cables, before VRM efficiency losses. So it measures more power than what the GPU actually draws.
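To make the first point concrete, here is a minimal sketch of turning voltage and current samples into watts and dropping glitch readings. The 600 W plausibility cutoff and the sample values are assumptions chosen for illustration; this is not the PMD's actual software or its data format.

```python
def cable_power_w(samples, max_plausible_w=600.0):
    """Convert (voltage, current) samples to watts and drop glitch readings.

    max_plausible_w is an assumed cutoff, picked only to reject readings
    such as the 6000 W outliers mentioned above.
    """
    powers = [volts * amps for volts, amps in samples]
    return [p for p in powers if 0 <= p <= max_plausible_w]


# Made-up samples: two believable readings and one glitch.
samples = [(12.0, 13.0), (12.0, 14.0), (12.0, 500.0)]
clean = cable_power_w(samples)
print(clean)  # [156.0, 168.0]
print(f"average: {sum(clean) / len(clean):.0f} W")  # 162 W
```

Filtering before averaging matters here, since a single 6000 W glitch in a few dozen samples is enough to drag the average far from reality.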

Given these two points, the first thing I notice is that the average line for the PMD puts the power going through the cables at around 160 W on average under load. Accounting for VRM efficiency losses and the theoretical power going through the PCIe slot, it seems we could land on the 180-190 W measured by HWiNFO, shown by the blue line. That would mean the Intel driver, and therefore HWiNFO, is measuring total board power.
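The reasoning above can be checked with a rough budget. This sketch assumes the reported value is board input power, so that cables plus slot should add up to it and VRM losses do not need to be modeled separately. That is an assumption, not something confirmed by Intel. The 75 W figure is the maximum a PCIe x16 slot is allowed to supply.

```python
PCIE_SLOT_LIMIT_W = 75.0  # maximum power a PCIe x16 slot may supply


def implied_slot_power_w(reported_total_w: float, cable_w: float) -> float:
    """Slot power needed for cables + slot to add up to the reported total."""
    return reported_total_w - cable_w


# 160 W is the approximate cable average from the PMD; 180 W and 190 W
# are the two ends of the range reported by HWiNFO.
for reported in (180.0, 190.0):
    slot = implied_slot_power_w(reported, 160.0)
    plausible = 0 <= slot <= PCIE_SLOT_LIMIT_W
    print(f"{reported:.0f} W reported -> {slot:.0f} W via the slot, plausible: {plausible}")
```

A slot draw of 20 to 30 W sits comfortably within what the slot can deliver, which is consistent with the reported value being total board power.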

With this info, I now find it weird that Intel advertises a 225 W TBP but sets the limit at 190 W by default, considering most people will probably not touch the power limit and therefore never see the 225 W power draw.

The other interesting part is that the PMD measures spikes well above 225 W. Some values reach 270 W or even over 300 W just on the cables. Even if my PMD can make mistakes, the power draw is quite a bit more spiky than I’d like. I still think this card should not be an issue with most PSUs of 550 W and above if the quality is right. I did not encounter any issues with my 650 W unit at least.

Temperatures, fan speed, and a word on Noise

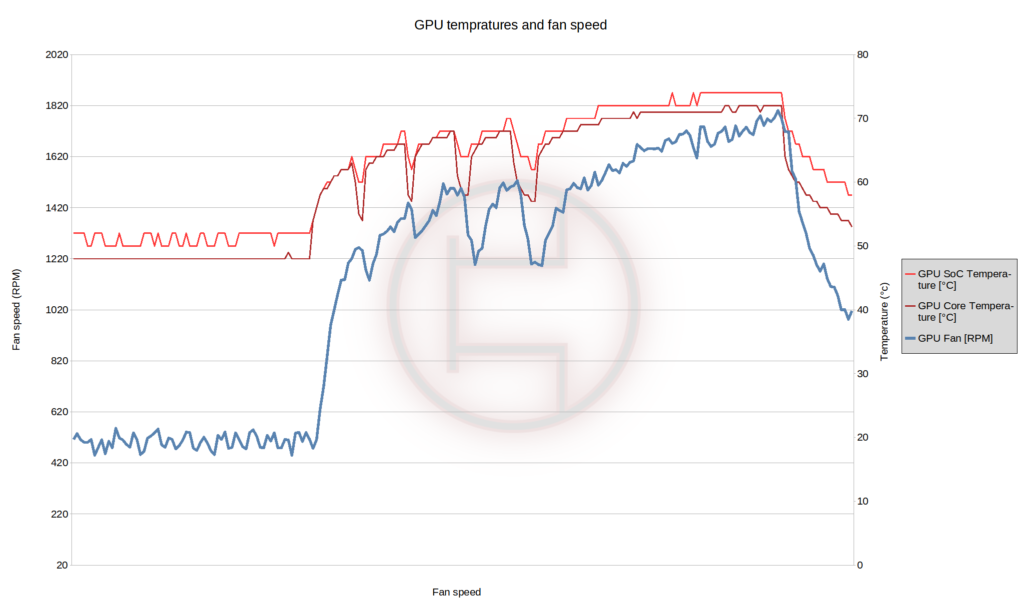

All values are reported by HWiNFO while running a Cyberpunk 2077 benchmark. The first thing to note here is that before starting the benchmark, the fans are already running at around 500-600 rpm. I could not get temperatures low enough for the fans to stop. Even with temperatures below 50 °C, the fans keep going and never stop. This is honestly fine, as the noise generated at 600 rpm is very low and you can easily confuse it with the sound of case fans or CPU fans.

The temperatures and fan speeds climbed as expected under load once I started the game. Even at the peak of the benchmark, we barely reached 75 °C, and I never saw the GPU run past 80 °C in any situation (granted, this is in a well-ventilated case with three 140 mm fans spinning at all times).

The fans peaked at around 1800 rpm at the end of the benchmark. I find this very reasonable and had no issues with it during my testing. Overall the fan curve is pretty decent. Noise stays contained at lower rpm, but the fans still have headroom to ramp up if the GPU is heating up.

Now sadly I do not have proper equipment to measure noise output, especially with the noise of the CPU cooler near it, so I have no objective values to report here. All I can say is that I never found the fans to be too loud or annoying. The noise levels for me were similar to those of my RX 5700XT Pulse: a good balance between fan performance and noise.

What I did hear, however, was coil whine. As a reminder, coil whine is a natural phenomenon that is sadly a total lottery. The good news is that it is not dangerous at all and presents no risk for you or the hardware. However, despite not hearing much of it in the case, it was really annoying when I put the card on an open-air test bench. The moment I load a game, a screeching sound comes from the card and drowns out the noise of both the GPU and CPU fans. It comes and goes when switching between game menus and live gameplay, but when it shows up, it is louder and sharper than any other component in the system.

Oddly enough, this seems to be pure luck: after putting it back in the Silent Base 802 (the test bench uses a whole different set of hardware), the coil whine became much more bearable. As said earlier, it’s a total lottery, and a lot of parts can affect it, including the power supply, the motherboard, a potential riser…

State of the drivers?

This has been a great concern among some people who feared the drivers would be problematic on the first generation. Despite Intel having experience building drivers for integrated graphics on CPUs, dedicated units are a whole different story that Intel themselves underestimated.

Well, as it turns out, I have had a relatively normal experience with the drivers for this review. I was able to install them and use ARC Control without trouble, the GPU was picked up correctly after a simple reboot, and the overlay has been displaying accurate information so far. I have not had any crashes or applications hanging at all, which is a pleasant surprise I will say. I have not encountered issues with fan behavior, artifacts or glitches in game, or any form of instability.

Now, there is definitely still room for improvement. The features are still rather barebones. Tweaking is limited to a few OC settings, the fan curve cannot be adjusted, and I have not tested all the media features yet. I also have some concerns about the size of the driver package at 1.2 GB, which I consider massive compared to Nvidia’s and AMD’s packages, which are already considered big at half that size if not less.

The RGB control did work as expected after installing the ARC RGB application, which is surprisingly complete. I was able to assign a few colors to the different zones without hiccups. The only issue is the USB cable running around the case, which ruins the look a bit.

But overall, for a simple user like me who would mostly be gaming and that’s it, there’s nothing game-breaking so far to the point of putting it back on the shelf. This is still a positive point in my book: a limited but functional driver is still better than a driver that is broken everywhere.

In conclusion

Let us unpack all of this data quickly: Intel is not competitive in Europe, plain and simple.

The card itself is well made and the hardware is fine. Aside from coil whine, noise is contained, temperatures are in check, and the specs are not outdated despite the delays Intel faced. The issue comes down to performance.

In gaming at 1920×1080, I would just buy an RX 6600 for at least 100 euros less. The gap is too big to consider the A770 an attractive option, and there is really no feature to compensate for it other than “it’s Intel” if you’re into that. At 2560×1440, it is still expensive even if a bit better. It shows more consistency in the results and could face the 6600XT if it were at the right price.

Now that I have it, I will most likely replace my RX 5600XT with it, unless it also makes horrendous coil whine in my rig. Despite being bad value, it still performs better after all.

Now, would I call this launch a disaster? Absolutely not. Despite horrible pricing here, it is slightly more attractive in the US where it retails for $349. The driver is actually “fine”, or at least it was for me, as I don’t tinker too much with things and keep my PC relatively clean on the software side. And still, the card is well made physically. It isn’t a blower disaster or an overheating mess, and it isn’t a cheap pile of crap parts poorly put together or anything. It’s a reasonable card, which is desperately trying to carve out some space between two giants that have been fighting each other for over 20 years now.

I do wish for ARC to live and improve; one can never get too much competition. For now however, this looks more like a card for enthusiasts who want to poke around with it than a card you’d recommend to the average Joe who only games on weekends or a bit after work.