Introduction

Nvidia hosted its GTC 2022 conference this week, from March 21st to the 24th. There weren't any consumer announcements, but the enterprise reveals were just as interesting. Most notably, Nvidia unveiled its Hopper GPU architecture and its Grace CPU.

Hopper Joins the Nvidia Lineup

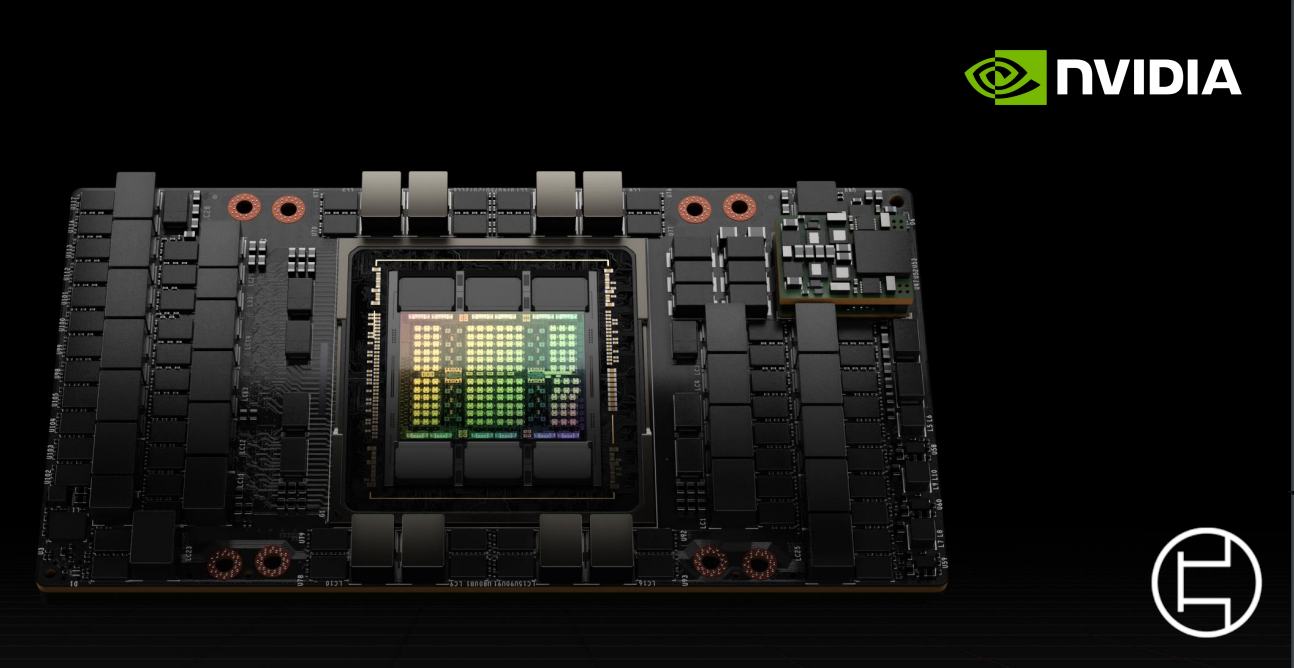

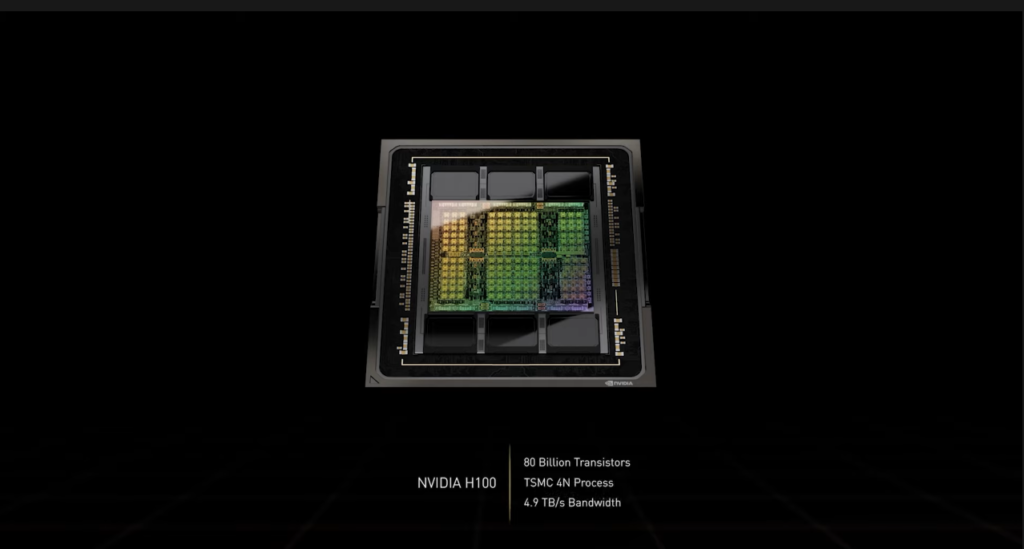

The first and most interesting product Nvidia revealed at GTC this year was the Hopper architecture, along with the H100 GPU and accompanying DGX servers. According to Nvidia, the H100 offers up to 30X the performance of the A100 in AI inference on the largest models. The H100 features a staggering 80 billion transistors and is built on TSMC's 4N process. It is also the first GPU to use HBM3 memory, which increases bandwidth between the die and VRAM, and the first to support PCIe Gen5. The H100 is expected to ship in Q3 of this year.

Nvidia H100 CNX

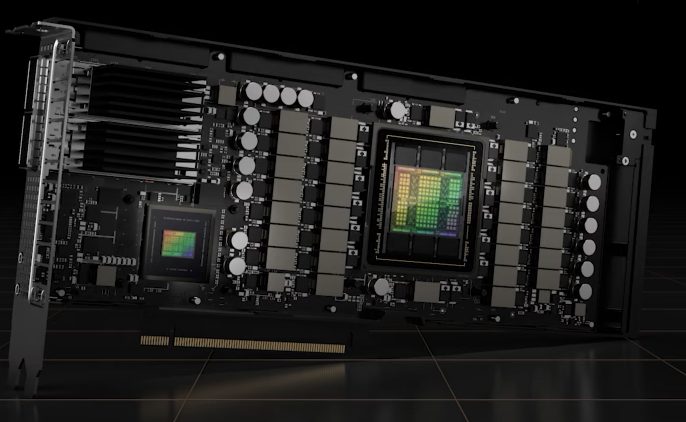

As with the previous generation, the H100 will also come in a PCIe version. The H100 CNX uses the same chip as the H100, but pairs it on a single board with a ConnectX-7 network module.

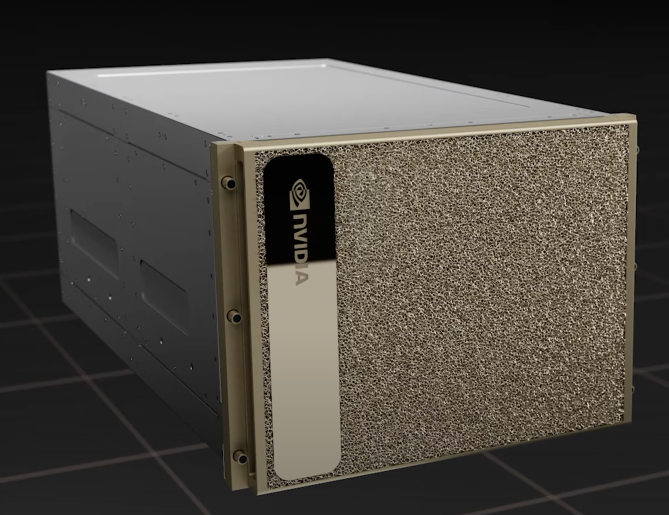

New Nvidia DGX

Like the Ampere-based DGX A100, the new Hopper-based DGX H100 will feature 8 H100 GPUs, 4 NVSwitch chips to connect them, 2 new ConnectX-7 network modules, and 2 Intel Ice Lake processors. Below are the other specs Nvidia has provided.

- 8 H100 GPUs totalling 640 billion transistors

- 32 petaflops of FP8 AI performance

- 640 gigabytes of HBM3

- 24 terabytes per second of memory bandwidth

Take these numbers with a grain of salt, as they are mainly marketing figures and may not reflect the final product.

These DGX systems can also be linked into a pod using an external NVLink Switch. Nvidia calls this a DGX SuperPOD and says a pod of 32 DGX H100 systems delivers about 1 exaflop of FP8 AI performance. Pods can, in turn, be connected over InfiniBand to form a larger supercomputer, and Nvidia is building its own supercomputer, called Eos, this way.
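As a rough sanity check on how these figures fit together, the short Python sketch below multiplies per-GPU numbers up to a single DGX and then to a pod. Only the 80 billion transistor count comes from the article; the per-GPU memory, bandwidth, and FP8 figures are simply the DGX totals divided by eight, and the 32 systems per pod is an assumption based on Nvidia's DGX SuperPOD description. The names `h100`, `scale`, and the dictionary keys are illustrative only.

```python
# Per-GPU H100 figures. Only the transistor count is stated in the article;
# the other three are the DGX totals divided by eight (an assumption).
GPUS_PER_DGX = 8
DGX_PER_SUPERPOD = 32  # assumption: Nvidia's stated DGX SuperPOD size

h100 = {
    "transistors_billion": 80,
    "hbm3_gb": 80,
    "bandwidth_tb_s": 3,
    "fp8_petaflops": 4,
}


def scale(spec: dict, factor: int) -> dict:
    """Multiply every per-unit figure by the number of units."""
    return {key: value * factor for key, value in spec.items()}


# Aggregate eight GPUs into one DGX, then 32 DGX systems into one pod.
dgx = scale(h100, GPUS_PER_DGX)
pod_exaflops = dgx["fp8_petaflops"] * DGX_PER_SUPERPOD / 1000  # PF -> EF

print(dgx)
print(f"SuperPOD: ~{pod_exaflops:.2f} exaflops FP8")
```

Under these assumptions it reproduces the DGX totals listed above and gives a pod figure of about 1.02 exaflops, which lines up with the roughly 1 exaflop Nvidia quoted.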

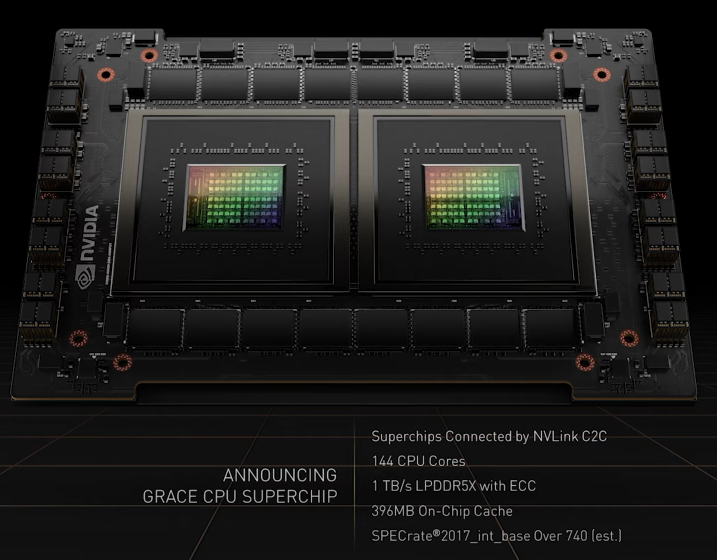

Grace Joins the Nvidia Lineup

The next reveal from Nvidia was the Grace CPU Superchip. It combines two Grace CPUs, linked with NVLink-C2C, into a single module containing 144 Arm-based cores. It promises to be very powerful, so keep an eye out for more information. Further specifications are below:

- Connected via NVLink-C2C

- 144 CPU Cores

- 1 terabyte per second of ECC LPDDR5X memory bandwidth

- 396 megabytes of cache

Other Nvidia Reveals

Nvidia also improved its deep learning and AI platforms and openly invites developers to build on them. It made significant advances in robotics, especially self-driving vehicles. This year, there was also an emphasis on Nvidia Omniverse and digital twins. Nvidia's team hopes to recreate Earth as a digital twin with a functioning weather model, to help us better understand our world's past and how to protect it in the future.

There were many other reveals and webinars. If you would like to watch the full keynote, it is here. If you are interested in watching more in-depth webinars, you can still register here. To see our other coverage of reveals like this, click here.