Shortly after our Intel ARC A770 16GB Edition review, I began working on the A750, the “cheaper” version as I call it. It is the second graphics card Intel released in October this year, and it looks like a slightly cut-down A770. However, I was worried from the start about the performance difference between the two cards, considering the rather large price difference.

This review will be comparing the two as well as other cards from AMD and Nvidia to try and get an idea of what is worth it and at which price point. As a reminder, this card was funded and bought by ourselves directly. All the results here are those obtained in our own benchmarks.

The ARC A750 GPU

This is the second “reference” design from Intel, which is essentially the same design as the A770 without the RGB. The specs, however, are slightly different:

| Graphics Card | ARC A770 | ARC A750 |
| --- | --- | --- |
| Codename | Alchemist | Alchemist |
| Lithography | TSMC N6 | TSMC N6 |
| Graphics Clock | 2100 MHz | 2050 MHz |
| Graphics Memory | 16 GB GDDR6 | 8 GB GDDR6 |
| Memory Interface | 256-bit | 256-bit |
| Memory Bandwidth | 560 GB/s | 512 GB/s |
| Memory Speed | 17.5 Gbps | 16 Gbps |
| PCI Express Ver. | 4.0 x16 | 4.0 x16 |
| TBP | 225 W | 225 W |
| MSRP | $329 USD | $289 USD |

The main differences we can see are the slower GPU clock and memory speeds, as well as half the VRAM. The two cards are very similar in all other regards. This very much reminds me of the differences between the RX 5700XT and 5700 or perhaps the more recent RX 6800XT and 6800. Two very similar cards, but in this instance, the VRAM configuration changes a lot more.
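The bandwidth figures in the table follow directly from the bus width and the memory speed. As a quick sanity check, here is a minimal sketch using the standard peak-bandwidth formula (bus width in bytes multiplied by the per-pin data rate). The function name is my own and only illustrative:

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s: bytes per transfer times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# Values taken from the spec table above.
a770_bandwidth = memory_bandwidth_gb_s(256, 17.5)
a750_bandwidth = memory_bandwidth_gb_s(256, 16.0)

print(f"A770: {a770_bandwidth:.0f} GB/s, A750: {a750_bandwidth:.0f} GB/s")
print(f"A750 bandwidth deficit: {(1 - a750_bandwidth / a770_bandwidth) * 100:.1f}%")
```

On paper, the A750 gives up a little under 9% of the A770's memory bandwidth, on top of losing half the capacity.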

Just like the A770, we still have the same 225 W TBP, which is still high for this class of card. At least Intel did not cut any of the major features with the A750. We still find support for DX12 Ultimate, Vulkan, OpenCL, OpenGL, and encoding/decoding features.

Here is the full product page from Intel.

The Test System

Here is the full system used for benchmarking all graphics cards:

| GPU tested | AMD GPU | Intel GPU | Nvidia GPU |
| --- | --- | --- | --- |
| CPU | Intel Core i7 12700K | Intel Core i7 12700K | Intel Core i7 12700K |
| Motherboard | MSI Z690 Tomahawk WiFi DDR4 | MSI Z690 Tomahawk WiFi DDR4 | MSI Z690 Tomahawk WiFi DDR4 |
| BIOS version | 1.80 | 1.80, 1.90* | 1.80 |
| Cooling | Arctic Liquid Freezer 2 360mm | Arctic Liquid Freezer 2 360mm | Arctic Liquid Freezer 2 360mm |
| RAM | 2×8 GB Crucial Ballistix 3600MT/s CL16 | 2×8 GB Crucial Ballistix 3600MT/s CL16 | 2×8 GB Crucial Ballistix 3600MT/s CL16 |
| GPU driver version | 22.10.3 | 31.0.101.3802 | 526.86 |
| Storage | Samsung 970 Evo Plus | Samsung 970 Evo Plus | Samsung 970 Evo Plus |
| Case | Be quiet! Silent Base 802 | Be quiet! Silent Base 802 | Be quiet! Silent Base 802 |
| Power supply | MSI A650GF | MSI A650GF | MSI A650GF |

*Due to a driver issue, a single benchmark was run on BIOS 1.90. This only affects the A750 in Red Dead Redemption 2. More information later in this review.

All benchmarks are done on a Windows 11 22H2 installation with all available updates applied. DDU is used when switching GPU vendors to make sure drivers from different vendors do not conflict with each other.

Here are the different graphics cards used in this review:

Intel ARC A770 Limited Edition

Intel ARC A750 Limited Edition

Nvidia RTX 3070 Founders edition

Sapphire RX 5700XT Pulse

PowerColor RX 5600XT ITX

MSI RX 460 ITX (Note: this card has been deshrouded and is cooled by a Noctua NF-A9x14 plugged into the motherboard, as the original fan died.)

All cards have been benchmarked at both 1920×1080 and 2560×1440 resolutions. Each value represents an average of at least 3 runs (more for some games). VSync is always disabled.

All hardware is running at stock settings except for the RAM running at XMP at Gear 1 and the CPU running without power limits. Two separate EPS cables have been used for CPU power and two separate PCIe cables have been used for GPUs that require more than one.

The Intel A750 has a 190 W default power limit, which was not changed for this review.

Gaming Benchmarks

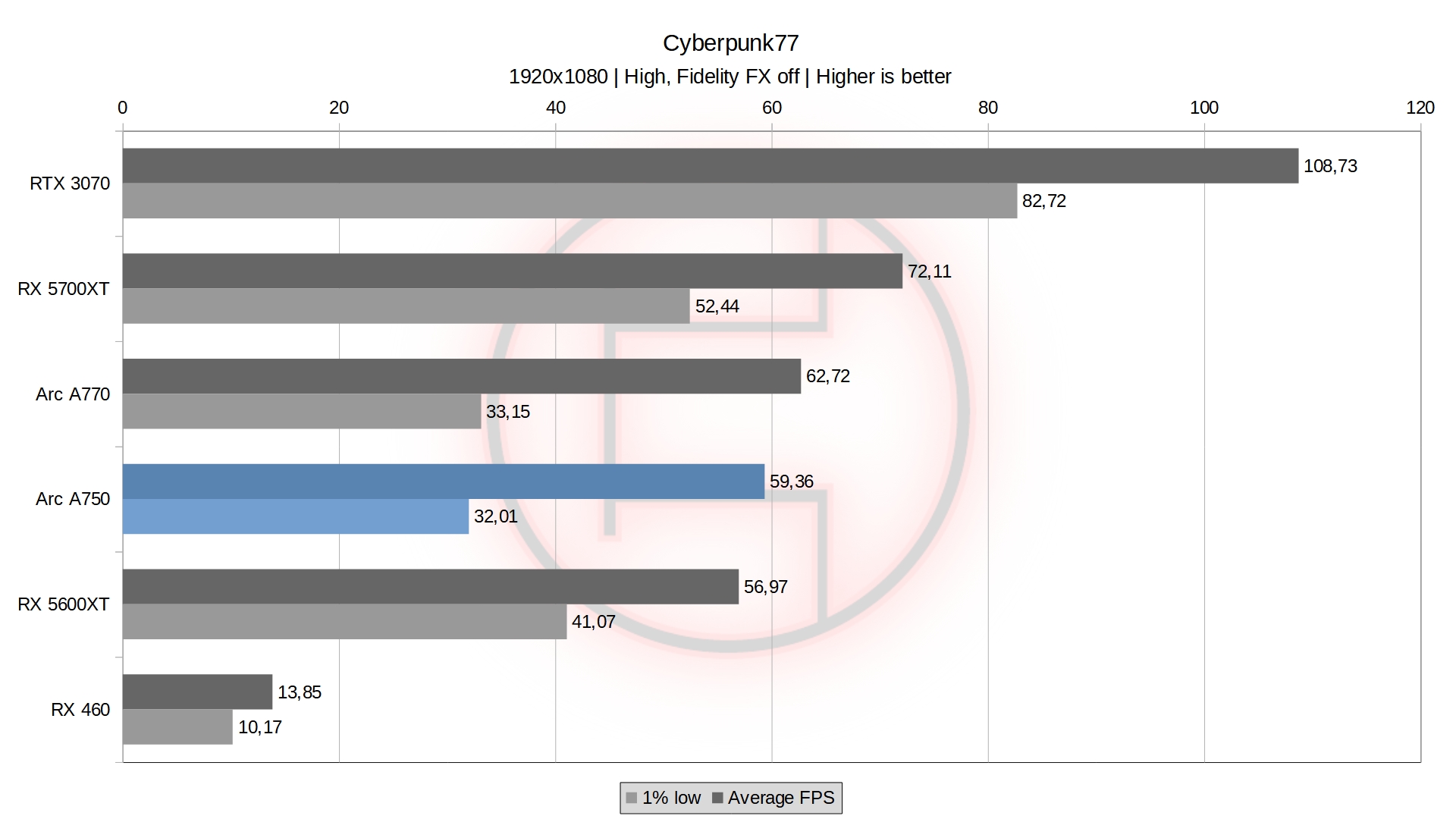

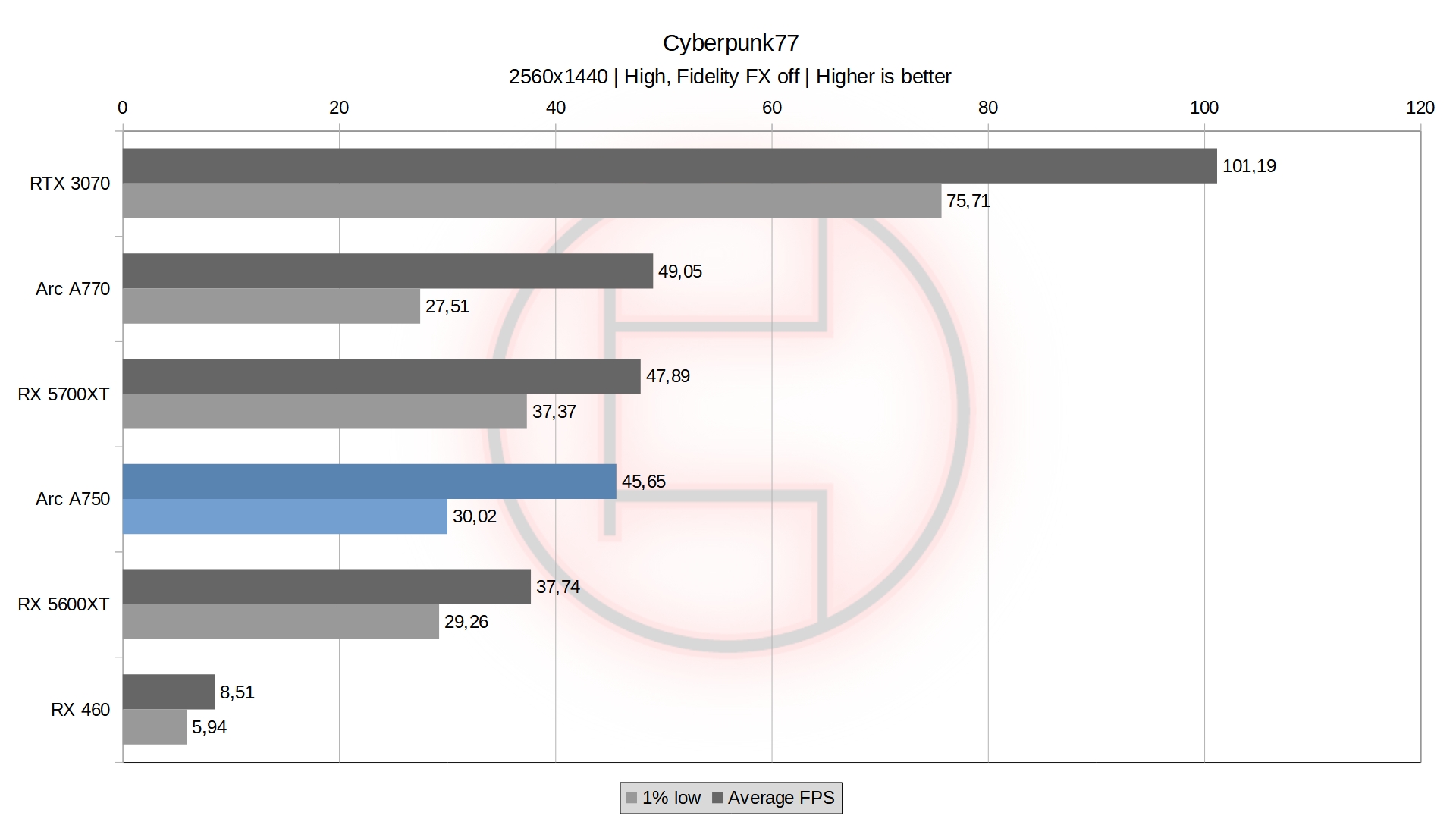

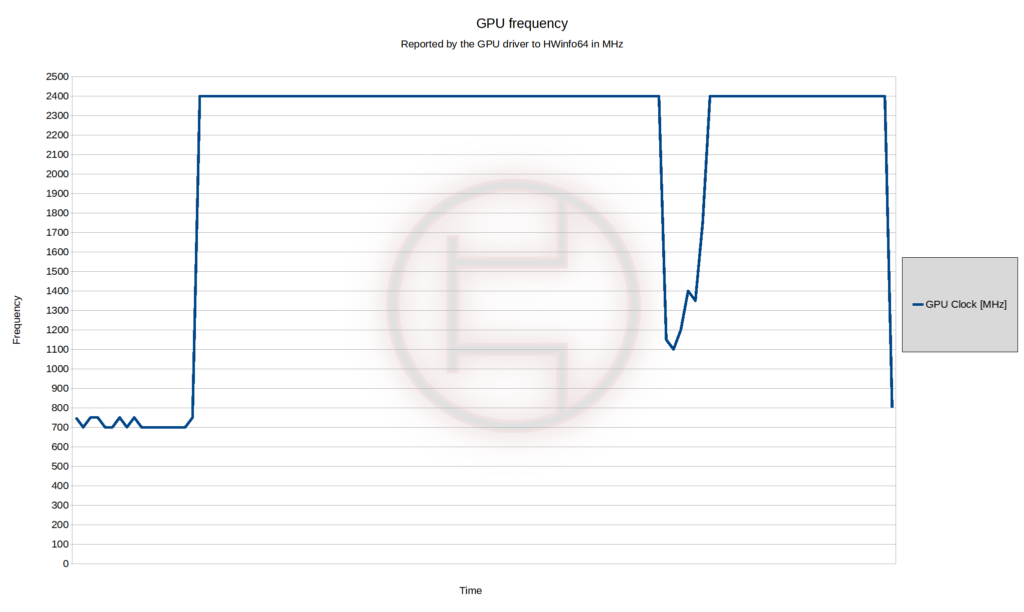

Just like with the ARC A770, the advertised 2050 MHz clock is not very useful, as running a Cyberpunk 2077 benchmark sees the GPU boosting all the way to 2400 MHz. I can also confirm this was the case for the entirety of the review. This means the GPU clock difference between the A770 and the A750 no longer exists in gaming, as both boost to the same frequency.

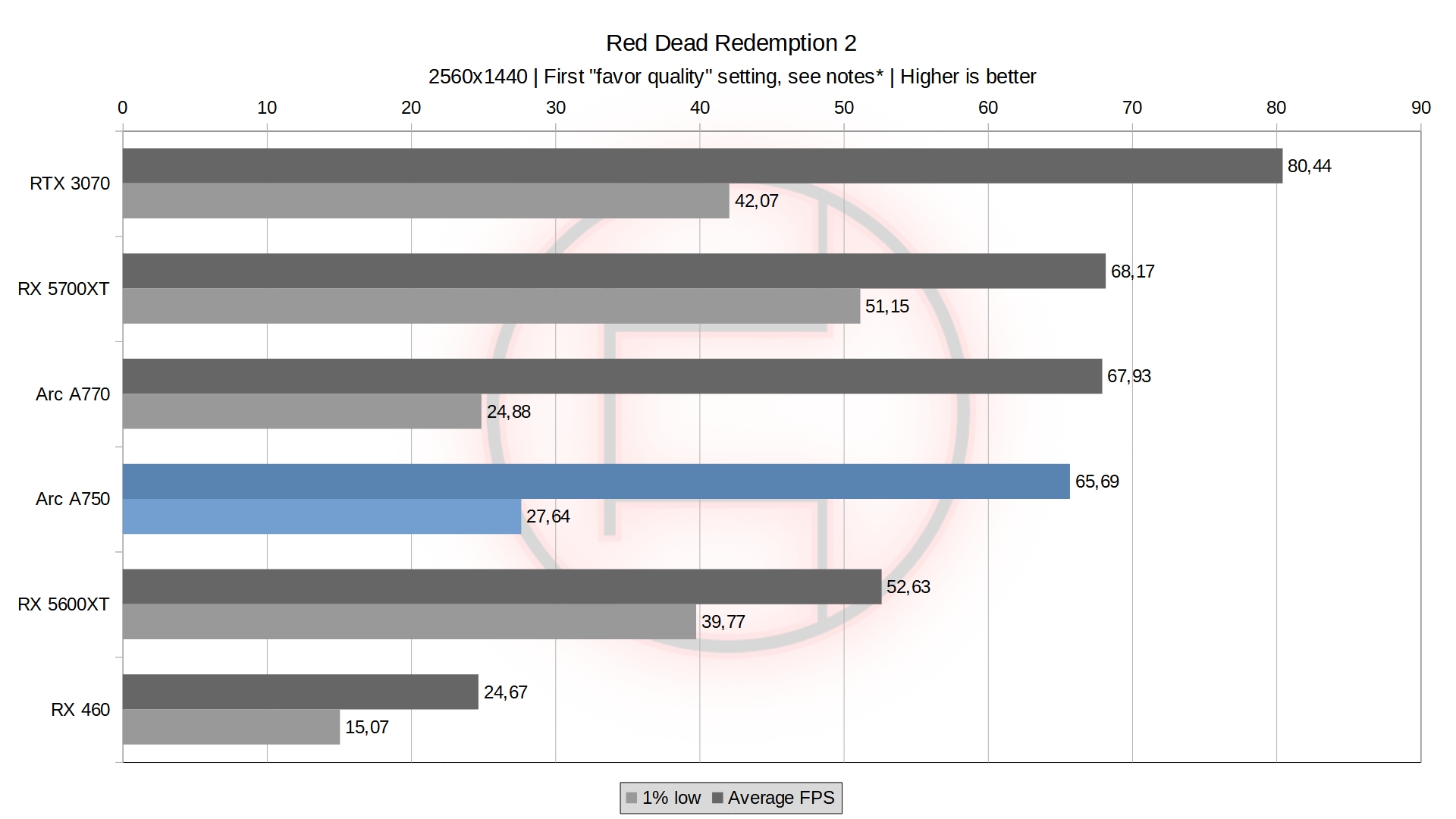

1920×1080 Benchmarks

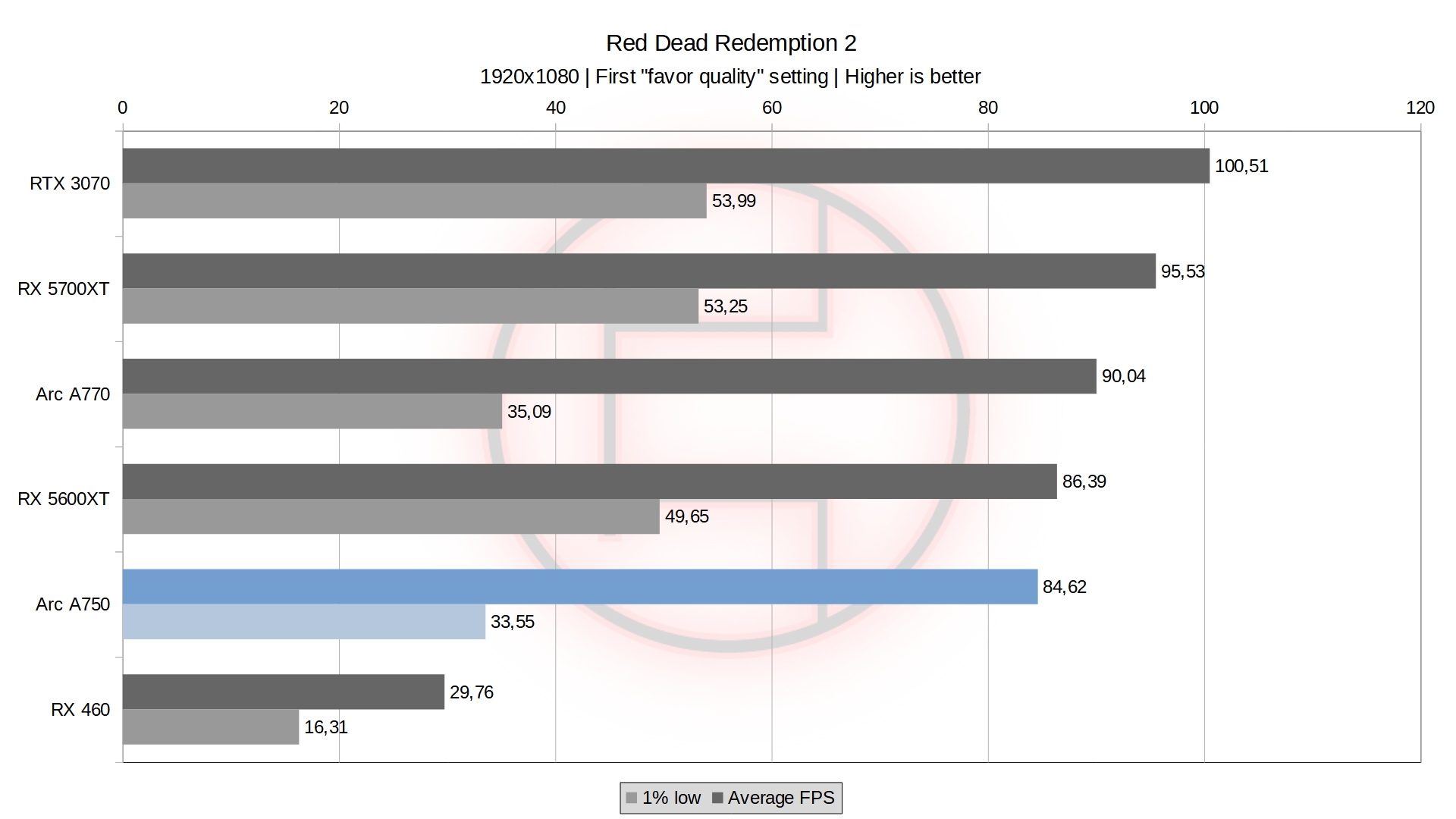

The first note here is about the driver issue. I encountered my first ARC driver issue with Red Dead Redemption 2, which would crash while running the in-game benchmark. The crash always appeared at the same point (almost the same frame every time), despite running DDU multiple times and re-installing the drivers. Verifying the game files did not help, and neither did re-installing. I would get a hard BSOD pointing to a video/graphics issue, which we confirmed to be the driver thanks to the dump created by Windows. In a desperate attempt to fix it, I updated the board's BIOS to version 1.90, hoping it would work better. This sort of worked, as I was able to get one benchmark to complete, but only one. This result is sadly the only one I could get in the middle of all the crashes. I still present it as it is somewhat consistent with other results, but it should be taken with a grain of salt.

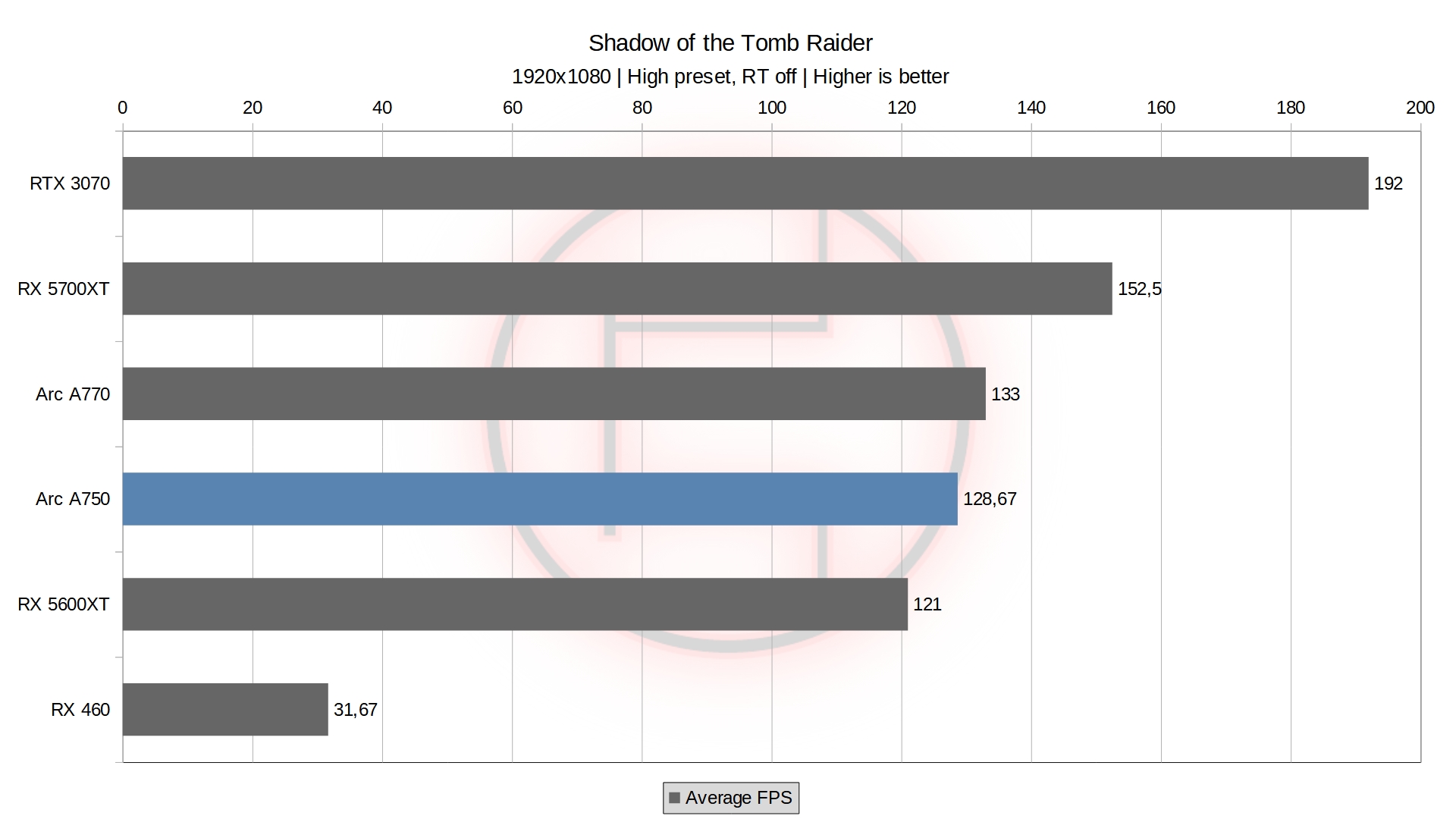

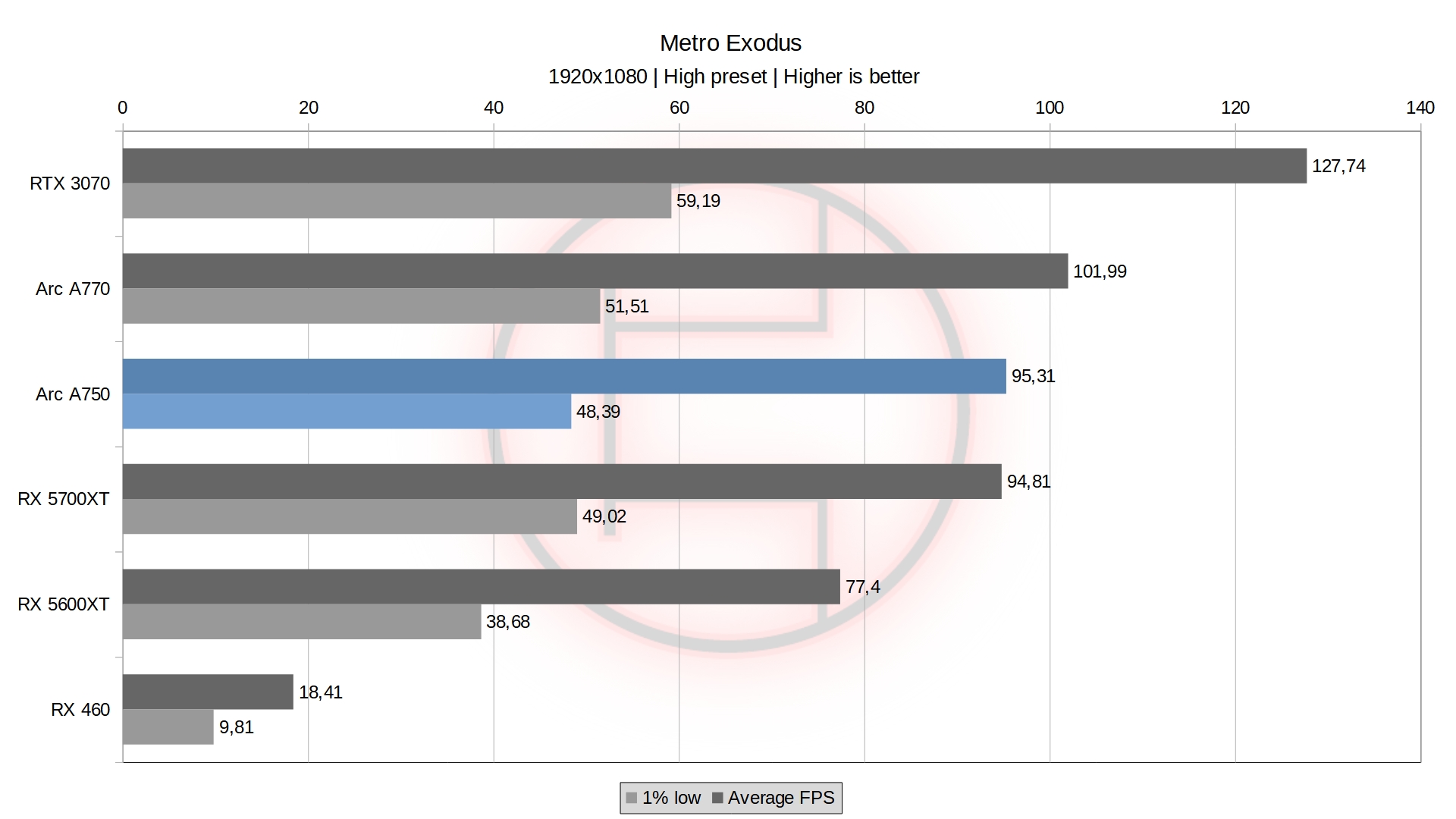

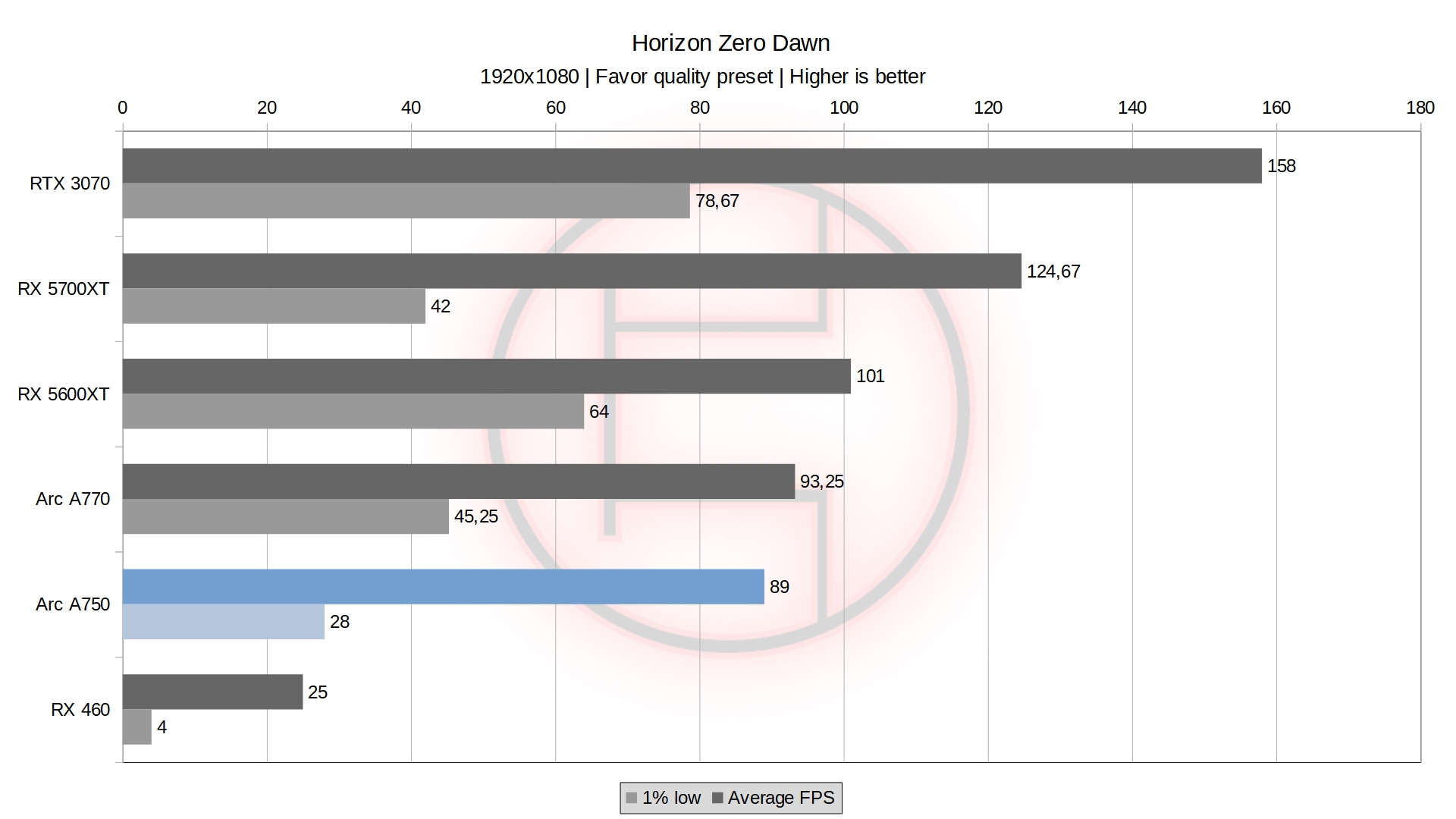

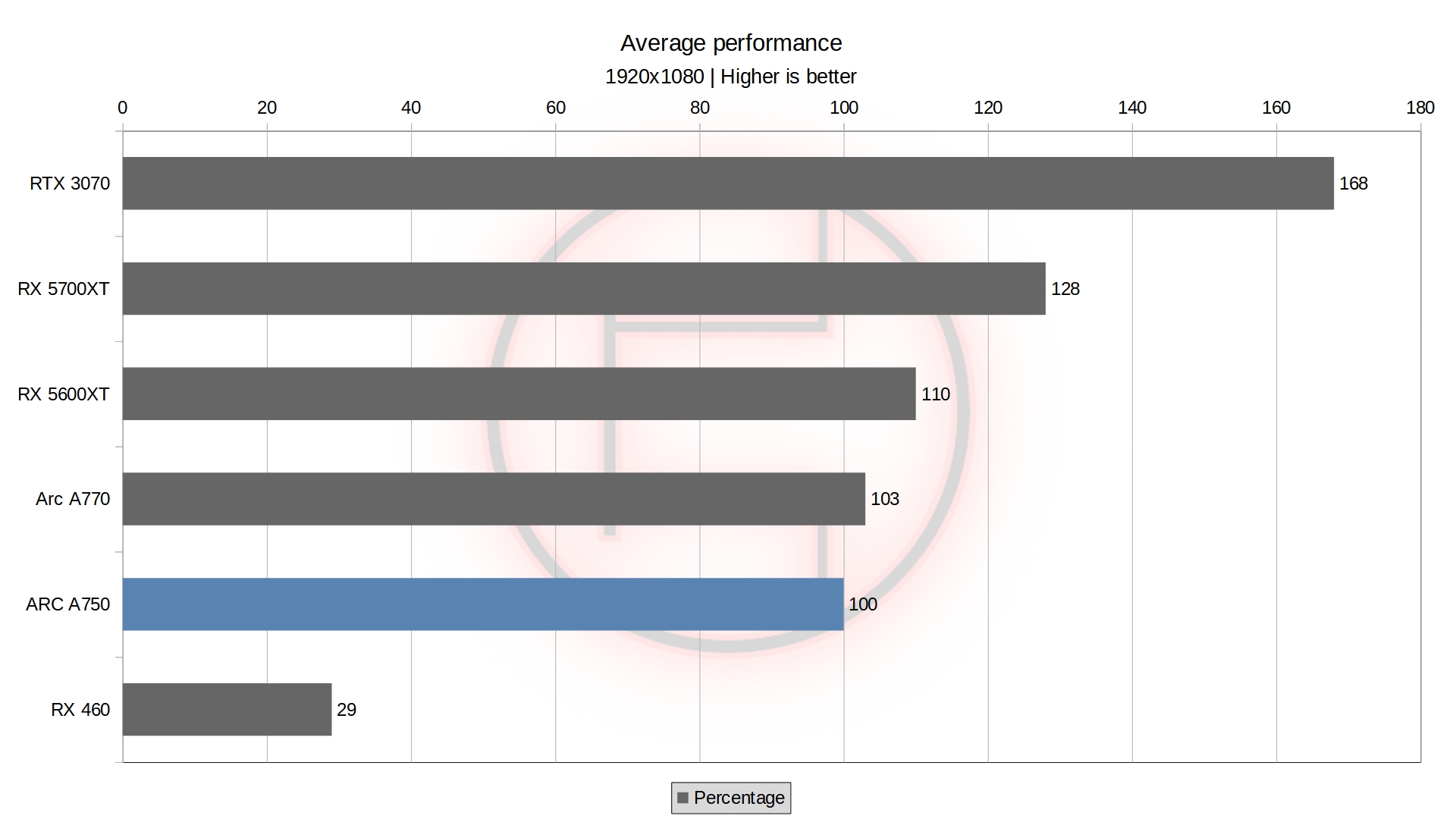

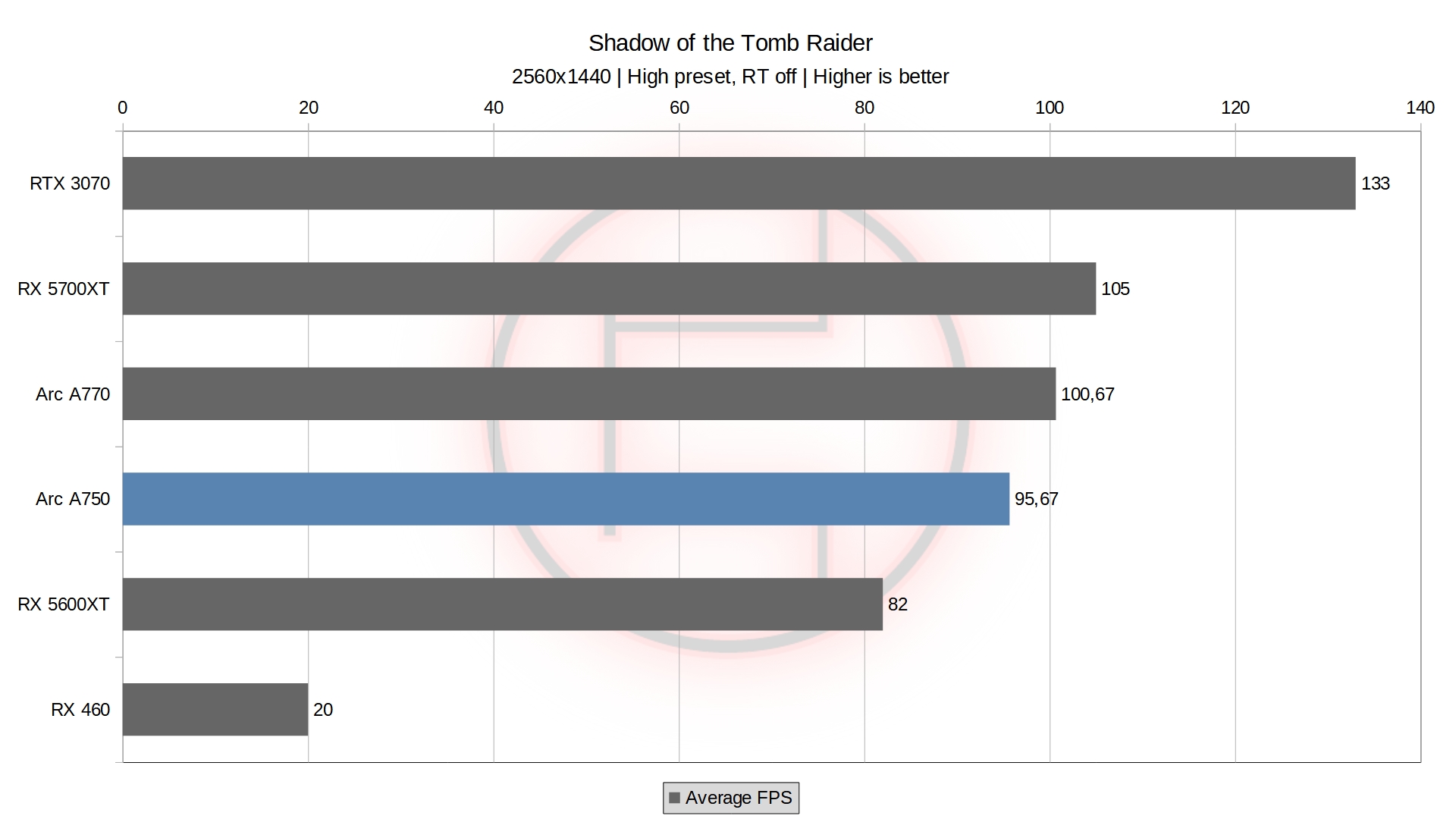

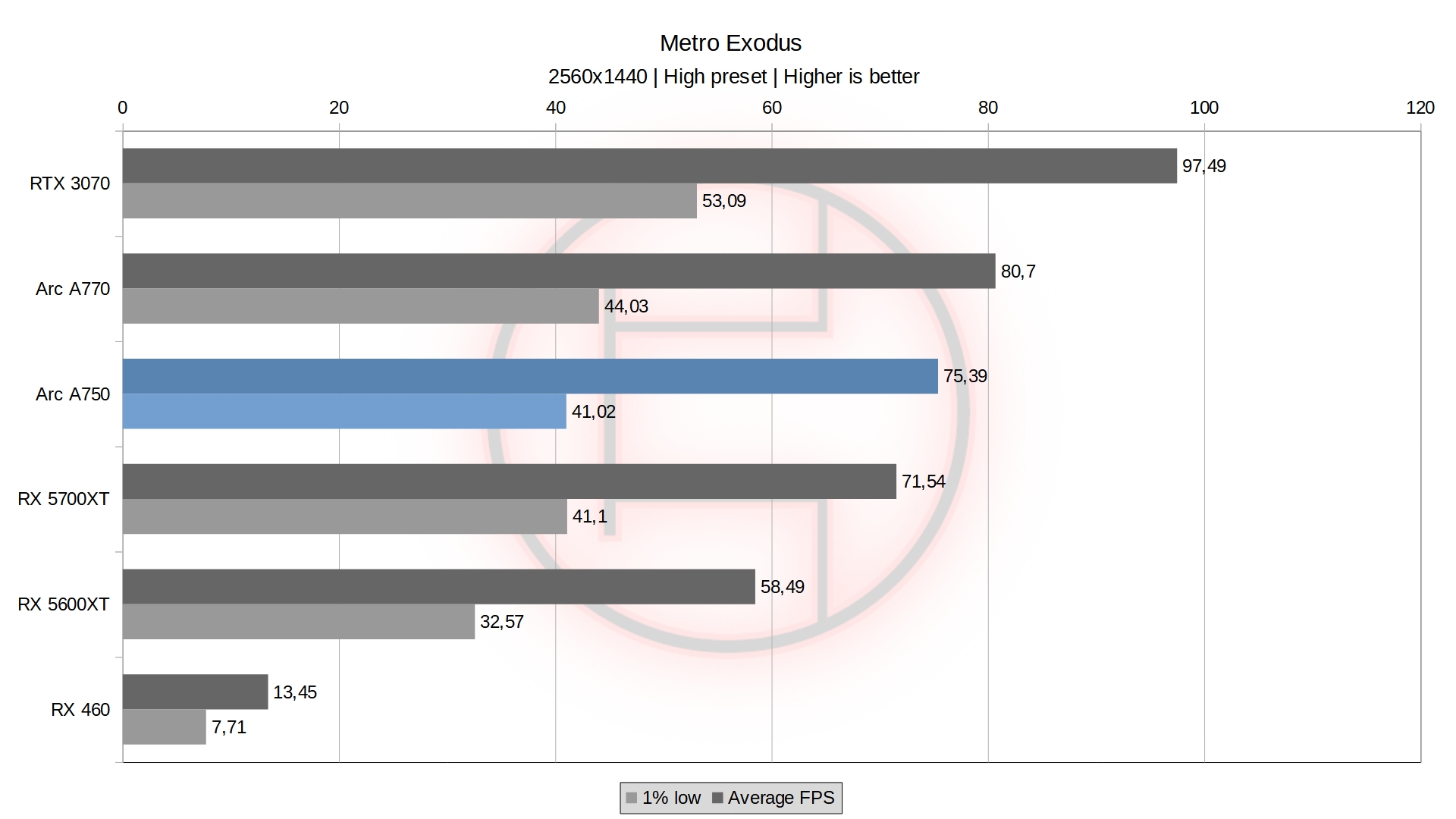

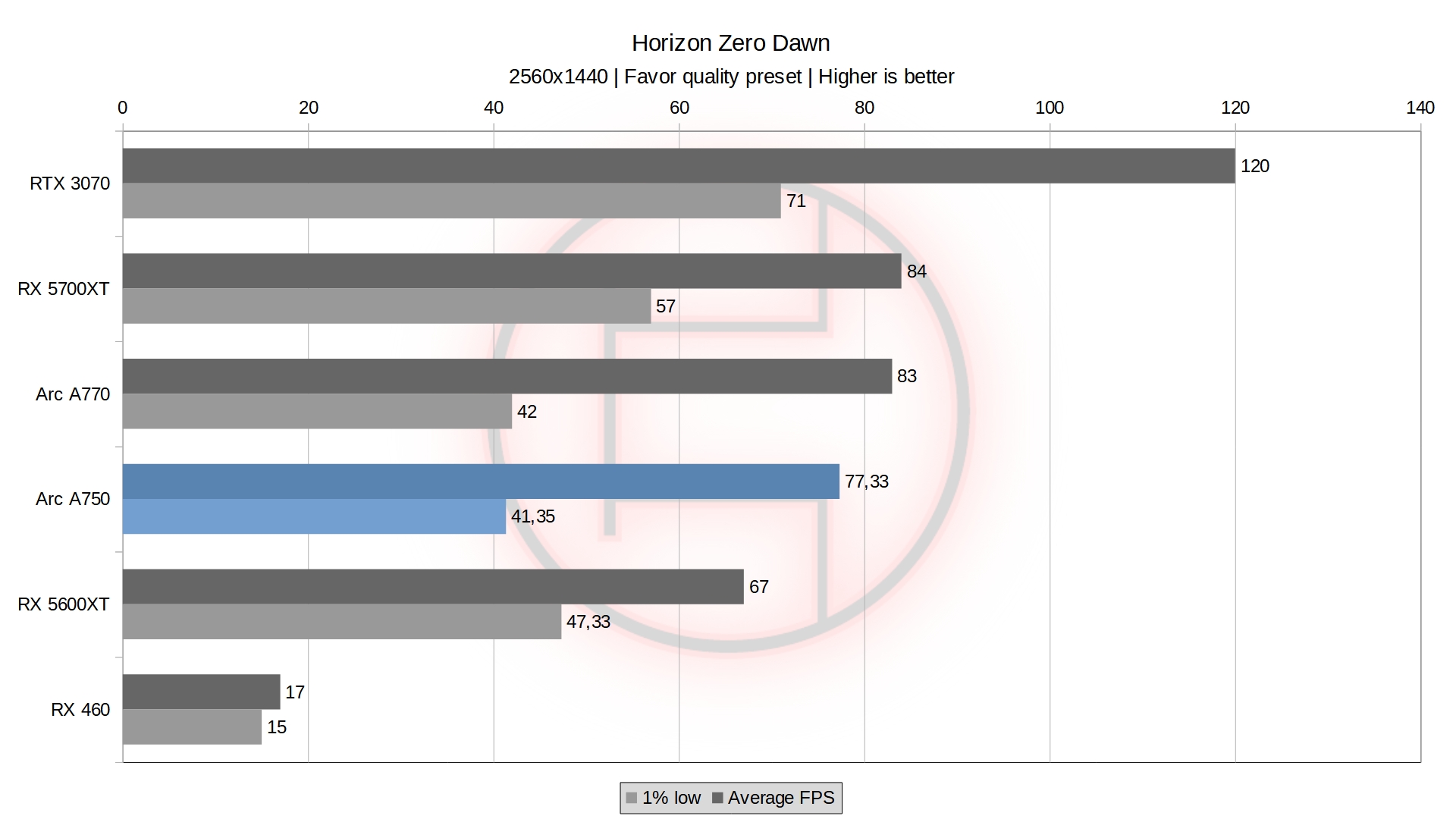

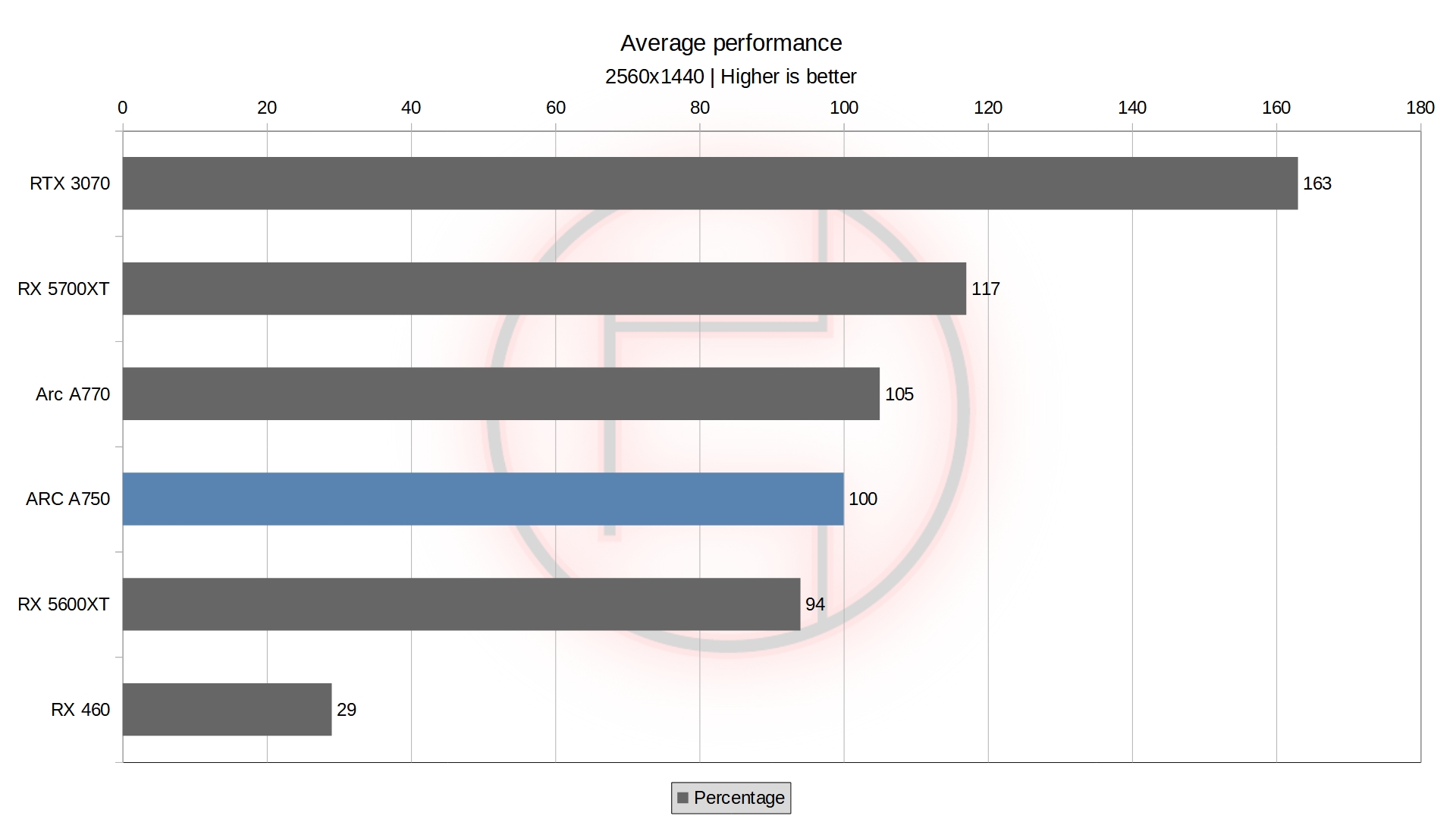

We still see very inconsistent results depending on the game. Just like the A770, it can outperform both the RX 5600XT and the RX 5700XT or fall behind both. However, the most notable aspect of the A750 is how close it is to the A770 in general. There is little to no perceivable difference between the two cards, so much so that on average the A750 is only 3% slower. Considering the 380 euros I spent on the A750, it makes the A770 look like a terrible investment. This makes the A750 slightly more competitive here when compared to other cards.

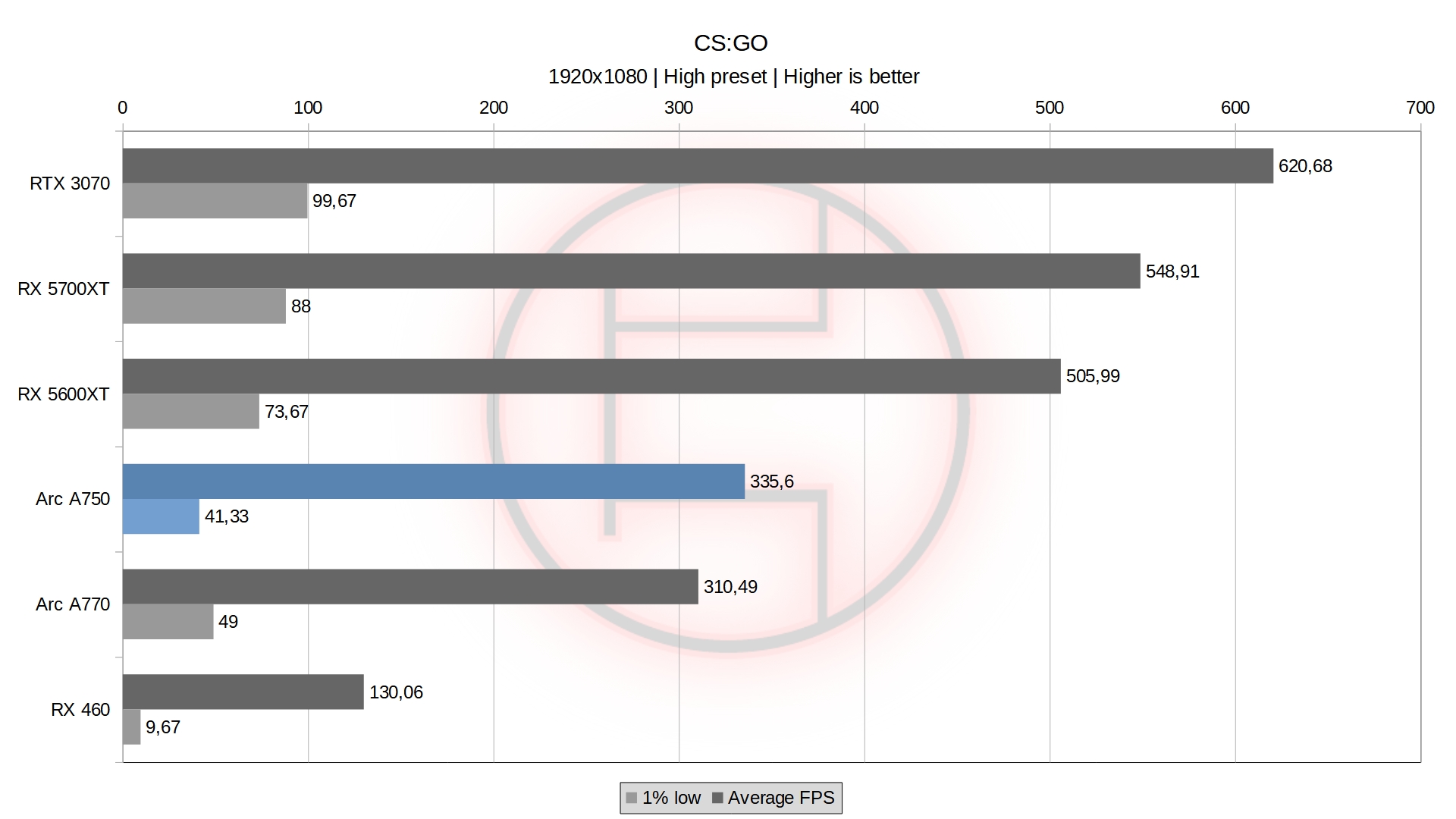

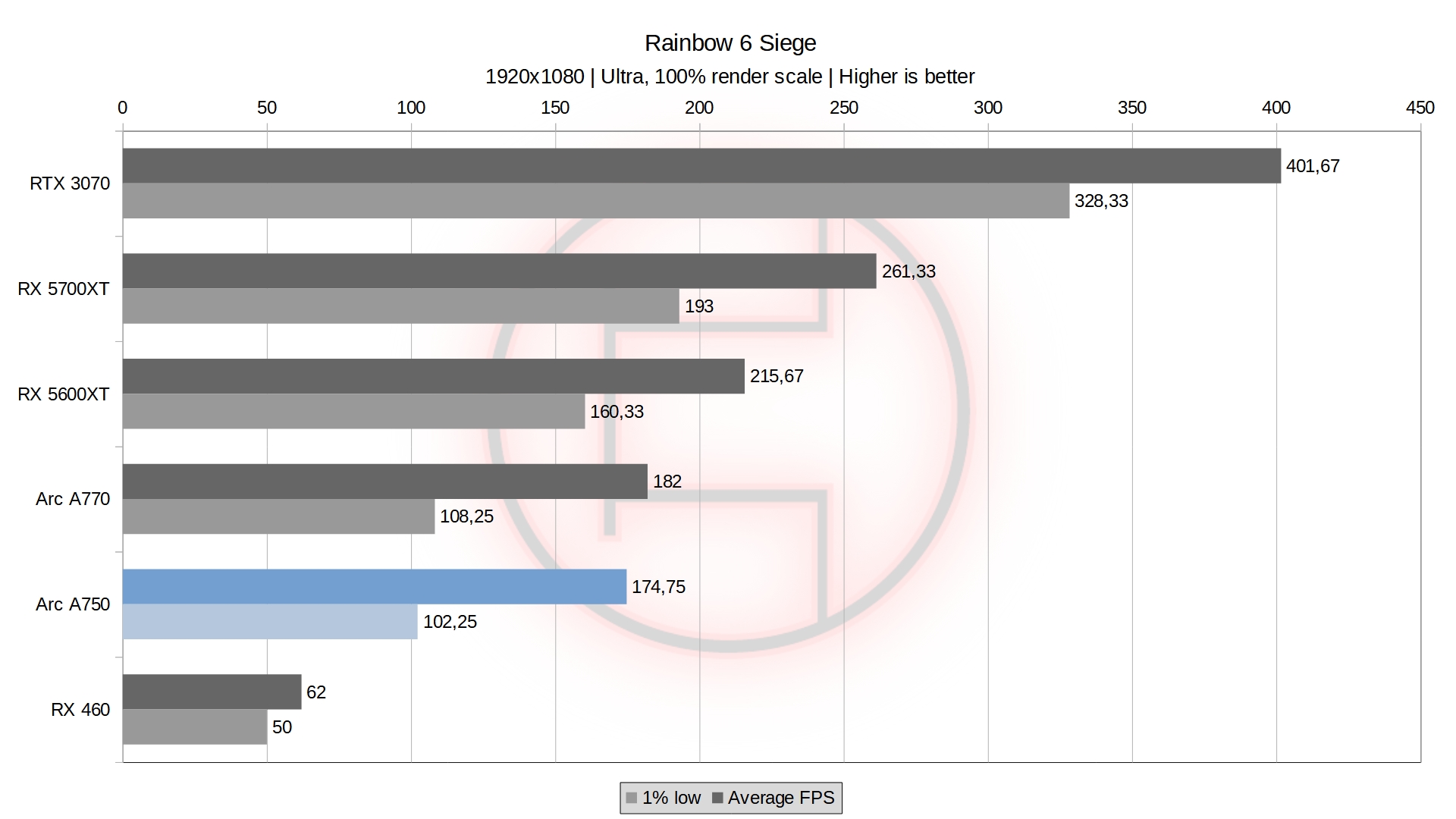

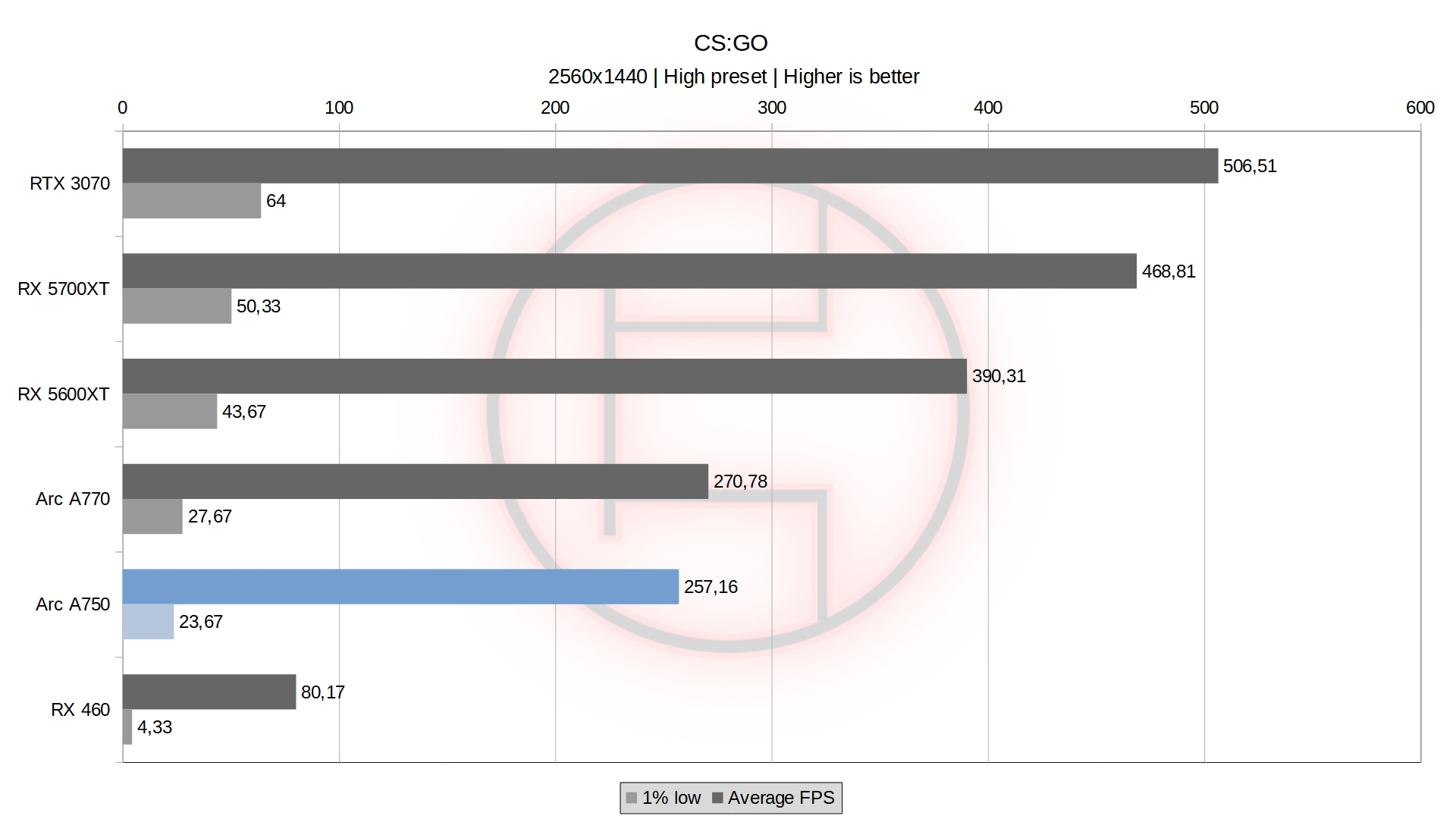

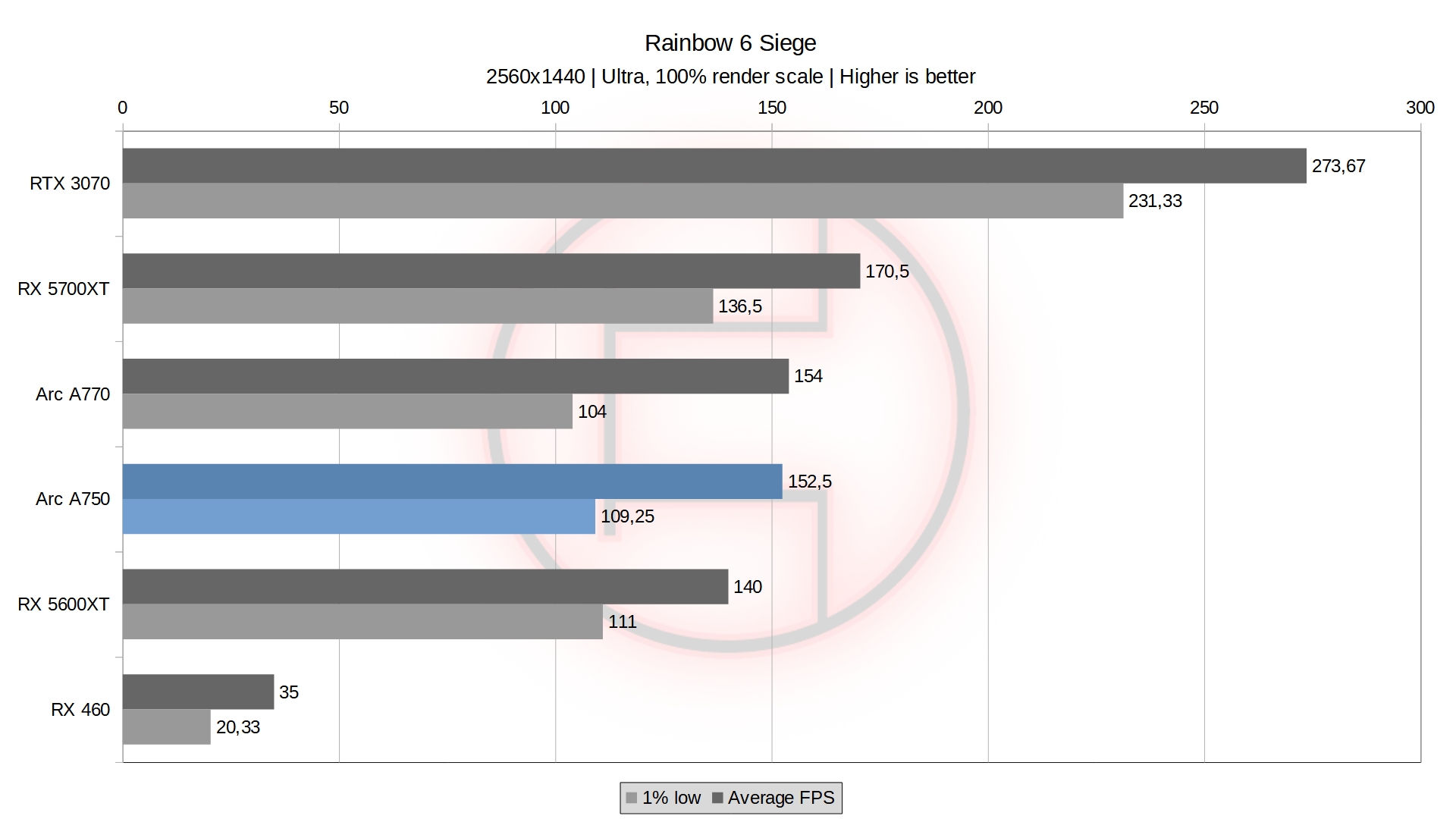

Interestingly enough, if we forget about the CS:GO results, which is where ARC is the most behind, the A750 is only 4% behind the RX 5600XT at 1920×1080. Going one step further and ignoring the Rainbow 6 Siege results, the A750 is now reaching the same performance as the RX 5600XT and the A770 surpasses both by 5%. The gap between the A750 and the RX 5700XT also drops down from 28% to 16%. This shows that CS:GO and Rainbow 6 Siege are two games that severely under-perform with ARC GPUs, mostly due to their older API setup.
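To make the effect of dropping outlier titles concrete, here is a minimal sketch of how such an average gap can be computed. It assumes the overall figure is a plain arithmetic mean of per-game performance ratios, which is only one possible way of averaging. The FPS numbers are placeholders chosen for illustration, not results from this review, and the function and variable names are hypothetical:

```python
from statistics import mean


def relative_gap(results, card, baseline, exclude=()):
    """Average per-game deficit of `card` versus `baseline`, in percent.

    A positive value means `card` is slower than `baseline` on average.
    Games listed in `exclude` are left out of the average.
    """
    ratios = [
        fps[card] / fps[baseline]
        for game, fps in results.items()
        if game not in exclude
    ]
    return (1 - mean(ratios)) * 100


# Placeholder average FPS values, NOT measurements from this review.
placeholder_results = {
    "Game A": {"A750": 90.0, "RX 5600XT": 100.0},
    "Game B": {"A750": 105.0, "RX 5600XT": 100.0},
    "Old-API game": {"A750": 120.0, "RX 5600XT": 200.0},
}

full_gap = relative_gap(placeholder_results, "A750", "RX 5600XT")
trimmed_gap = relative_gap(
    placeholder_results, "A750", "RX 5600XT", exclude={"Old-API game"}
)
print(f"All games: {full_gap:.1f}% behind, without outlier: {trimmed_gap:.1f}% behind")
```

With these placeholder values, a single badly performing title is enough to move the average gap from 2.5% to 15%, which is the same kind of swing CS:GO and Rainbow 6 Siege cause in the real results.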

Now, that still doesn’t make ARC look too good here considering I paid 380 euros for the A750 when I paid over 300 euros for my 5600XT almost 2 years ago now.

2560×1440 Benchmarks

Just like at 1920×1080, I encountered the same driver issue in Red Dead Redemption 2. I was again able to complete only one benchmark run, as the rest kept crashing at the same frame. The result is once more presented because I believe it is consistent enough despite being the only successful run.

At 2560×1440, we see a similar trend. The A750 is just behind the A770 by a small margin, making it the obvious value choice of the two Intel cards. The RX 5600XT is now beaten in most games, with the exception of CS:GO (which is still expected). Despite having only half the VRAM of the A770, it still scales somewhat with higher resolutions and bridges the gap with the RX 5700XT.

However, the difference between the A770 and the A750 also slightly increases, with the A770 being 5% ahead. It would be easy to say this is due to the different VRAM configuration, most likely the speed rather than the capacity. However, I have not fully tested this hypothesis and can neither confirm nor deny it for now.

Regardless, the A750 is now slightly faster than the RX 5600XT and could very well compete against an RX 6600 or an RTX 3060. Still, the price I paid is bad enough to make it worse value than the last two options mentioned, especially considering ARC still has a long road to mature.

Other Benchmarks

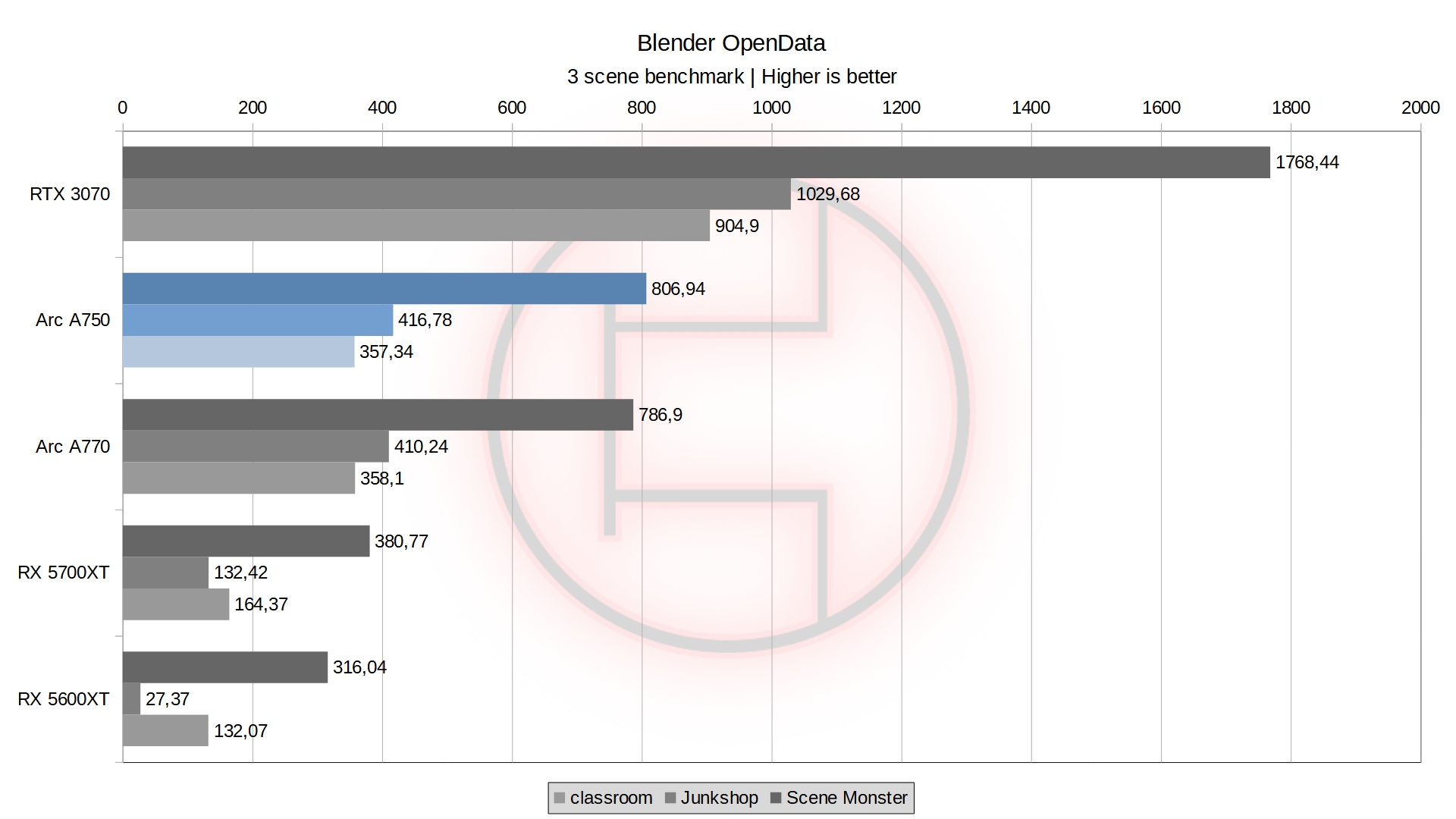

In a strange turn of events, the A750 somehow comes out just ahead of the A770 in Blender OpenData. The difference is so small that I’ll call it margin of error, but I still find it amusing that these are the results I got. I wish I had an RTX 3060 or an RX 6600 to compare the performance here, as the much lower price of the A750 locally could make it somewhat viable for this type of application.

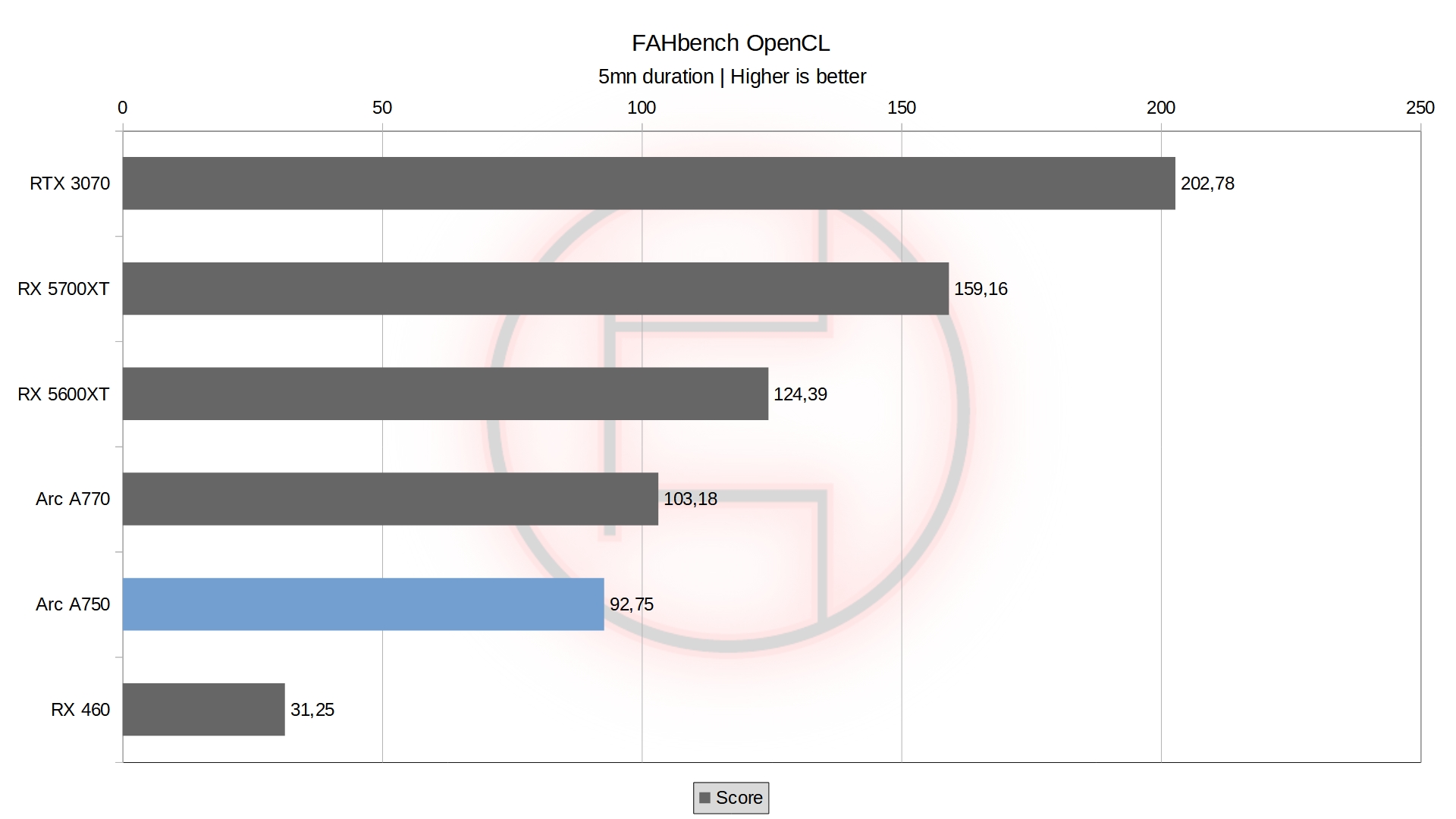

When looking at FAHBench for OpenCL performance, however, the conclusion is the same as for its bigger brother: it's simply not worth it.

Temperatures, Fan Speed, and a Word on Noise

These are all values reported by HWiNFO64 during a Cyberpunk 2077 benchmark to see how the card behaves when it comes to fans and temperatures.

Overall it is very similar to the A770 16GB. All temperatures are well below 80 °C for every component of the GPU measured here, and the fan speed is even lower than the A770's at under 1400 RPM. The A750 is both cooler and more relaxed when it comes to cooling than its bigger brother, which is nice to see.

On top of that, the fans went even lower than the A770's at idle. I was even able to get the fans to stop after leaving the PC on doing nothing for long enough. However, the idle temps are still high, as the lowest recorded here before I even opened the game was still around 47-48 °C. I don’t believe Intel’s implementation of fan stop is an issue, since the fans spin slowly enough at idle to be considered “silent” for me. Even if they never stop, they are not disturbing or loud outside of games.

I did notice some coil whine as well, but not as bad as the A770, and again, highly dependent on the setup. I am not worried about it, and I will have to see in a full-time rig if it is present at all times.

Overall, the card using the same cooler as the A770 allows it to run in better conditions despite using the same power limit and frequency. This keeps making the A750 the obvious value choice here.

As a final note, you can see I added a value “GPU memory temperature” which turns out to be the exact same value as the GPU SoC temperature. Whether those are the same sensor, a reporting error, or they happen to heat up the same, I do not know yet.

Power Consumption

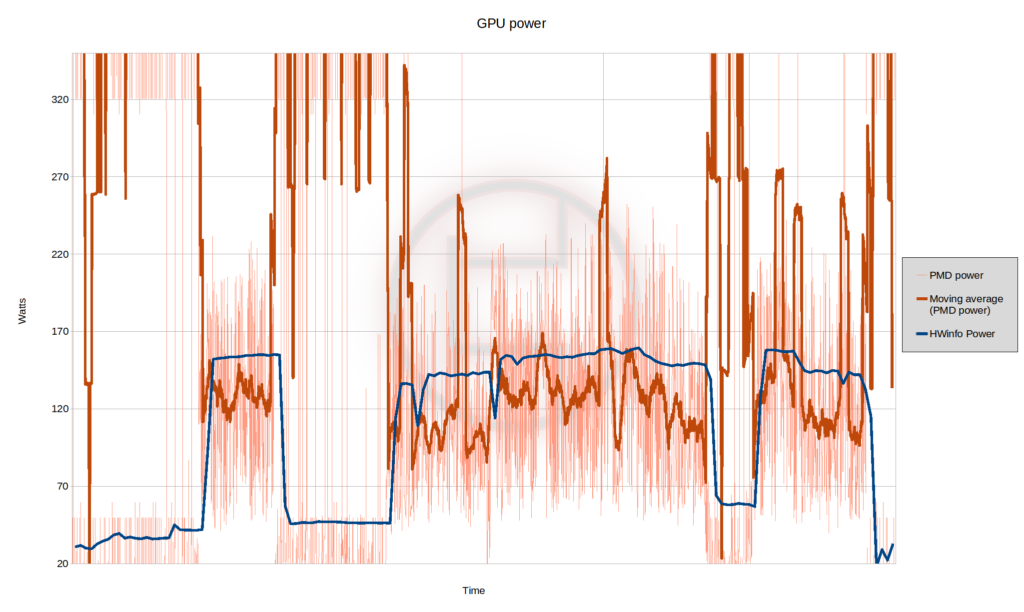

Yes, the eye-tearing graph is back. Let us analyze it before you can’t look at it anymore. This is power draw during a Cyberpunk 2077 benchmark.

As said in the A770 review, the ElmorLabs PMD has an “issue” which causes it to measure absurd amounts of power going through the cables (2500 W+), which I believe is caused by voltage reading errors. However, this only happens at idle: the graph shows almost no spikes when the GPU is under load, as you can see by comparing with the HWiNFO power reading.

Even with those errors, we can trace out an average from the measured values to get a rough idea of power draw through the cables. Remember that the PMD does not measure PCIe power which is why I use HWinfo to compare.
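One simple way to get such an average is to discard readings that are physically implausible before taking the mean. The sketch below illustrates that idea only, and it is not necessarily the exact method used for the graph. The 400 W cutoff is an assumed threshold picked for illustration, and the sample values are placeholders rather than logged data:

```python
from statistics import mean


def average_power_w(samples_w, max_plausible_w=400.0):
    """Mean of the readings that fall inside a plausible range.

    `max_plausible_w` is an assumed cutoff: anything above it is treated
    as a measurement glitch and ignored.
    """
    kept = [p for p in samples_w if 0.0 <= p <= max_plausible_w]
    if not kept:
        raise ValueError("no plausible samples left after filtering")
    return mean(kept)


# Placeholder readings in watts, NOT data logged for this review.
placeholder_samples = [122.0, 128.0, 2600.0, 125.0, 2550.0, 125.0]
print(f"Filtered average: {average_power_w(placeholder_samples):.1f} W")
```

A median would be another reasonable choice here, since it is naturally resistant to a handful of extreme spikes.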

HWiNFO itself reports around 160 W peak when running the benchmark. This is lower than the A770 by almost 30 W, which is surprising considering both supposedly have the same TBP. The power draw through the cables is approximately 120-130 W. Given VRM losses and PCIe power, we fall back on the same conclusion as with the A770: the driver and HWiNFO report total GPU power.

This is very reasonable power draw for this class of GPU. For reference, my RX 5600XT draws just 150 W according to HWiNFO while gaming. One could pair this card with a decent power supply of 550 W or more without issue. I do plan to try and power a full gaming system with that card and a good 450 W power supply to see if it works.

An Actual Driver Issue?

As mentioned earlier, I did encounter one issue that I can only attribute to the driver. When launching Red Dead Redemption 2 on the A750, I ran into a problem while running the benchmark. At a very specific time during the run, the computer would completely crash. At first I got a black screen, thought it was only a hiccup, and restarted to try again. As it turns out, the next run would just trigger a blue screen at the exact same frame, again and again.

After a few runs, I looked at all the options I had to see if I could fix it and continue benchmarking the game. I tried to DDU the driver several times and reinstalled it, but the issue was still there. I tried verifying the files of the game, but that didn’t help. I fiddled a bit with the graphics options of the game, as it seemed that only a specific frame of the benchmark was causing issues for some reason.

I asked a friend to analyze the dump files Windows created on each BSOD, and they all pointed to the Intel ARC driver dying. As a last attempt, I tried to update the BIOS of the motherboard to the latest version. Somehow, I managed to get a run to complete after first changing the game resolution before launching the benchmark after a system reboot.

However, that could only happen once as it failed with the same error every time I retried that theory. That is however how I managed to get a single run at 1920×1080 and 2560×1440 to complete. The performance outside of this issue is actually what I expected and there aren’t any other issues to note.

The last test I tried was just switching back to the A770 without changing the driver or anything. Not even a DDU or a game re-installation, just straight up changing the card and trying again. And it worked flawlessly every time I tried on the A770, with the exact same system as the A750.

While this could be an issue with the card itself, it did not show any other issue in any other game or load I put it through. And the fact that the error comes back every time at the same frame really makes me think of a driver issue more than a hardware issue.

Apart from that, I didn’t encounter anything game-breaking or worth noting during the entire review. It is nice to see that the drivers are decent this early after release.

Ending thoughts

Another clear conclusion from this review: the ARC A750 is much better value than the A770.

The performance difference is quite small: 3% at 1920×1080 and 6% at 2560×1440. I paid 80 euros more for the A770 and I can now see that it was not a good investment at all. The overall performance is still underwhelming however, barely performing better than an RX 5600XT outside of games running older APIs (see CS:GO and Rainbow 6 Siege benchmarks), and the prices in Europe are still very high. Again, I can find an RX 6700 for just 20 euros more than the price I paid for that A750. Even in the US market, the RX 6600 is slightly ahead at 1920×1080 for the same if not a cheaper price and has much more mature drivers and software.
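To put a number on that value gap, here is a rough performance-per-euro sketch. It uses only figures from this review: the 380 euros I paid for the A750, the 80 euros extra for the A770, and the A750 being 3% and 6% slower at the two resolutions. The function name is hypothetical, and local pricing will obviously change the outcome:

```python
def value_advantage(a750_relative_perf, a750_price_eur, a770_price_eur):
    """How much more performance per euro the A750 offers versus the A770.

    `a750_relative_perf` is the A750's performance with the A770 set to 1.0.
    Returns a fraction, e.g. 0.10 means 10% more performance per euro.
    """
    a750_value = a750_relative_perf / a750_price_eur
    a770_value = 1.0 / a770_price_eur
    return a750_value / a770_value - 1


A750_PRICE_EUR = 380.0                    # price paid for the A750
A770_PRICE_EUR = A750_PRICE_EUR + 80.0    # the A770 cost 80 euros more

for resolution, a750_perf in (("1920x1080", 0.97), ("2560x1440", 0.94)):
    advantage = value_advantage(a750_perf, A750_PRICE_EUR, A770_PRICE_EUR)
    print(f"{resolution}: A750 offers {advantage * 100:.0f}% more performance per euro")
```

At the prices I paid, that works out to roughly 17% more performance per euro at 1920×1080 and about 14% at 2560×1440 in favor of the A750.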

At 2560×1440 however, the picture changes slightly. It does perform better than my RX 5600XT and could be a worthy opponent to an RX 6600 or an RTX 3060. Having half the VRAM of the A770 does not impact it that much here. Just like its bigger brother, it does scale at higher resolution and bridges the gap with more powerful cards. However, the target audience for those cards is likely playing at 1920×1080, so this isn’t particularly useful.

I do prefer this model to the A770 overall for its slightly better value and better temperature and noise control. I was disappointed to have one bug with Red Dead Redemption 2, but considering this is the only issue I had reviewing both cards, I can say it wasn’t a bad experience.

It is a hard card to recommend, but I believe this could actually be decent value for those that aren’t afraid of early adopter issues.

For those looking for a safe pick however, an RX 6600 or an RTX 3060 is obviously a safer bet, even if it costs a bit more for Nvidia. I have to admit, I expected worse in terms of stability for a first gen product, and I am happy to be surprised I didn’t find more issues with the card.

Now, please excuse me while I try to make up for the horrible price I paid for mine and try to make that money worth it somehow.